Note: for essential background on vector search, see part 1 of our Introduction to Semantic Search: From Keywords to Vectors.

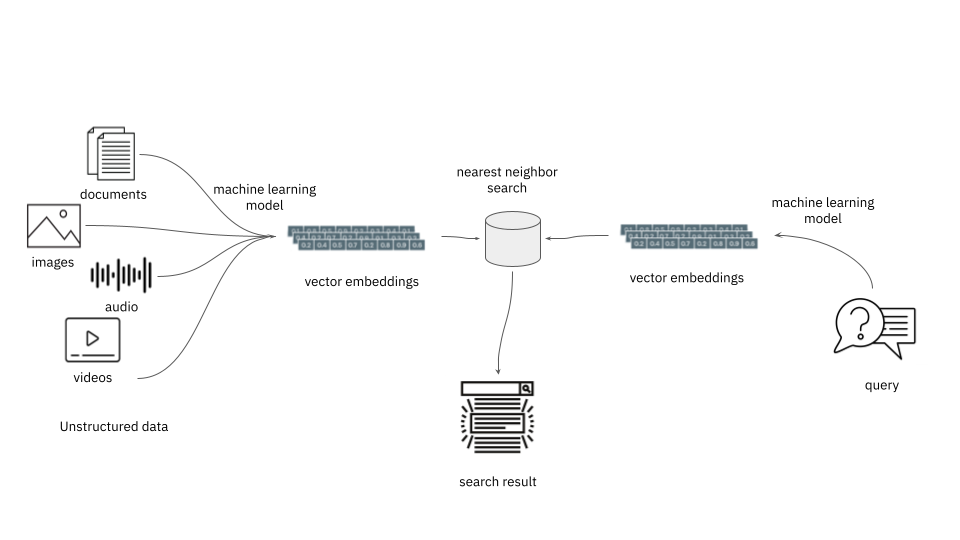

When building a vector search app, you’re going to end up managing a lot of vectors, also known as embeddings. One of the most common operations in these apps is finding other nearby vectors. A vector database not only stores embeddings but also facilitates such common search operations over them.

Finding nearby vectors is useful because semantically similar items end up close to each other in the embedding space. In other words, finding the nearest neighbors is the operation used to find similar items. With embedding schemes available for multilingual text, images, sounds, data, and many other use cases, this is a compelling feature.

Producing Embeddings

A key decision point in developing a semantic search app that uses vectors is choosing which embedding service to use. Every item you want to search on will need to be processed to produce an embedding, as will every query. Depending on your workload, there may be significant overhead involved in preparing these embeddings. If the embedding provider is in the cloud, then the availability of your system, even for queries, will depend on the availability of the provider.

This decision should be given due consideration, since changing embeddings will typically entail repopulating the whole database, an expensive proposition. Different models produce embeddings in different embedding spaces, so embeddings generated with different models are typically not comparable. Some vector databases, however, will allow multiple embeddings to be stored for a given item.

One popular cloud-hosted embedding service for text is OpenAI’s Ada v2. It costs a few cents to process a million tokens and is widely used across different industries. Google, Microsoft, HuggingFace, and others also provide online options.

If your data is too sensitive to send outside your walls, or if system availability is of paramount concern, it’s possible to produce embeddings locally. Some popular libraries for this include SentenceTransformers, GenSim, and several Natural Language Processing (NLP) frameworks.

For content other than text, a wide variety of embedding models is available. For example, SentenceTransformers allows images and text to be in the same embedding space, so an app could find images similar to words, and vice versa. A number of different models are available, and this is a rapidly growing area of development.

Nearest Neighbor Search

What exactly is meant by “nearby” vectors? To determine whether vectors are semantically similar (or different), you will need to compute distances, using a function known as a distance measure. (You may also see this called a metric, which has a stricter definition; in practice, the terms are often used interchangeably.) Typically, a vector database will have optimized indexes based on a set of available measures. Here are a few of the common ones:

A direct, straight-line distance between two points is called a Euclidean distance metric, or sometimes L2, and is widely supported. The calculation in two dimensions, using x and y to represent the change along each axis, is sqrt(x^2 + y^2). Keep in mind, however, that actual vectors may have thousands of dimensions or more, and every one of those terms needs to be included in the computation.
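The two-dimensional formula generalizes to any number of dimensions by summing one squared difference per dimension. Here is a minimal sketch in plain Python; the function name `euclidean_distance` is chosen for illustration and is not taken from any particular vector database.

```python
import math

def euclidean_distance(a, b):
    """Straight-line (L2) distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    # One squared difference per dimension, summed, then a single square root.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# A 3-4-5 right triangle: the change is 3 along x and 4 along y.
print(euclidean_distance([0.0, 0.0], [3.0, 4.0]))  # 5.0
```

A production system would normally rely on vectorized or hardware-accelerated routines rather than a Python loop, but the arithmetic is the same.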

Another is the Manhattan distance metric, sometimes called L1. This is like Euclidean if you skip all the multiplications and the square root; in the same notation as before, it is simply abs(x) + abs(y). Think of it as the distance you’d need to walk, following only right-angle paths on a grid.
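As a rough sketch, the same idea extends to many dimensions by adding up the absolute change along each axis. The function name `manhattan_distance` is illustrative only.

```python
def manhattan_distance(a, b):
    """Grid-walking (L1) distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    # Absolute change along each axis, summed; no squares or square root.
    return sum(abs(x - y) for x, y in zip(a, b))

# Walking 3 blocks east and 4 blocks north covers 7 blocks in total.
print(manhattan_distance([0.0, 0.0], [3.0, 4.0]))  # 7.0
```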

In some cases, the angle between two vectors can be used as a measure. A dot product, or inner product, is the mathematical tool used in this case, and some hardware is specially optimized for these calculations. It incorporates the angle between vectors as well as their lengths. In contrast, a cosine measure or cosine similarity accounts for angles alone, producing a value that ranges from 1.0 (vectors pointing in the same direction) through 0 (vectors orthogonal) to -1.0 (vectors 180 degrees apart).
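To make the difference concrete, here is a small sketch of both measures in plain Python. The function names are illustrative, and the example vectors were picked so that the three cosine values described above come out exactly.

```python
import math

def dot_product(a, b):
    """Inner product: sensitive to both the angle and the vector lengths."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Dot product divided by both lengths, leaving only the angle."""
    norm_a = math.sqrt(dot_product(a, a))
    norm_b = math.sqrt(dot_product(b, b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot_product(a, b) / (norm_a * norm_b)

same = cosine_similarity([1.0, 0.0], [2.0, 0.0])        # 1.0, same direction
orthogonal = cosine_similarity([1.0, 0.0], [0.0, 3.0])  # 0.0, right angle
opposite = cosine_similarity([1.0, 0.0], [-4.0, 0.0])   # -1.0, 180 degrees apart
print(same, orthogonal, opposite)

# The dot product grows with vector length even when the angle is unchanged.
print(dot_product([1.0, 0.0], [2.0, 0.0]), dot_product([1.0, 0.0], [4.0, 0.0]))  # 2.0 4.0
```

When all vectors are normalized to length 1, the dot product and cosine similarity give the same value.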

There are quite a few specialized distance metrics, but these are less commonly implemented “out of the box.” Many vector databases allow custom distance metrics to be plugged into the system.

Which distance measure should you choose? Often, the documentation for an embedding model will say what to use, and you should follow such advice. Otherwise, Euclidean is a good starting point, unless you have specific reasons to think otherwise. It may be worth experimenting with different distance measures to see which one works best in your application.

Without some clever tricks, finding the nearest point in embedding space would, in the worst case, require the database to calculate the distance measure between a target vector and every other vector in the system, then sort the resulting list. This quickly gets out of hand as the size of the database grows. As a result, all production-level databases include approximate nearest neighbor (ANN) algorithms. These trade off a tiny bit of accuracy for much better performance. Research into ANN algorithms remains a hot topic, and a strong implementation of one can be a key factor in the choice of a vector database.
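The exhaustive approach that ANN algorithms are designed to avoid can be sketched in a few lines. This is an illustration only: the item names and two-dimensional vectors are made-up toy values, and `exact_nearest_neighbors` is a hypothetical helper, not an API from any database.

```python
import heapq
import math

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def exact_nearest_neighbors(query, vectors, k=3):
    """Compare the query against every stored vector and keep the k closest.

    `vectors` maps an item id to its embedding. The cost grows linearly
    with the number of stored vectors, since every one is visited.
    """
    scored = ((euclidean_distance(query, vec), item_id)
              for item_id, vec in vectors.items())
    # nsmallest keeps only the k best, avoiding a full sort of all distances.
    return [item_id for _, item_id in heapq.nsmallest(k, scored)]

# Toy data; real embeddings would have hundreds or thousands of dimensions.
toy_vectors = {
    "cat": [0.9, 0.1],
    "kitten": [0.8, 0.2],
    "car": [0.1, 0.9],
}
nearest = exact_nearest_neighbors([1.0, 0.0], toy_vectors, k=2)
print(nearest)  # ['cat', 'kitten']
```

Every query touches every stored vector, which is why this exact search becomes impractical at scale and why approximate indexes are used instead.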

Choosing a Vector Database

Now that we’ve discussed some of the key elements that vector databases support (storing embeddings and computing vector similarity), how should you go about selecting a database for your app?

Search performance, measured by the time needed to resolve queries against vector indexes, is a primary consideration here. It’s worth understanding how a database implements approximate nearest neighbor indexing and matching, since this will affect the performance and scale of your application. But also investigate update performance, the latency between adding new vectors and having them appear in the results. Querying and ingesting vector data at the same time may have performance implications as well, so be sure to test this if you expect to do both concurrently.

Have a good idea of the scale of your project and how fast you expect your users and vector data to grow. How many embeddings are you going to need to store? Billion-scale vector search is certainly feasible today. Can your vector database scale to handle the queries-per-second (QPS) requirements of your application? Does performance degrade as the scale of the vector data increases? While it matters less which database is used for prototyping, you will want to give deeper consideration to what it would take to get your vector search app into production.

Vector search applications often need metadata filtering as well, so it’s a good idea to understand how that filtering is performed, and how efficient it is, when researching vector databases. Does the database pre-filter, post-filter, or search and filter in a single step in order to filter vector search results using metadata? Different approaches will have different implications for the efficiency of your vector search.
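The difference between pre-filtering and post-filtering can be shown with a toy, brute-force sketch. The function names, the item layout, and the `lang` metadata field are all assumptions made for illustration; real databases apply these strategies inside their indexes rather than over Python lists.

```python
import math

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def pre_filter_search(query, items, predicate, k):
    """Apply the metadata filter first, then rank only the survivors."""
    candidates = [item for item in items if predicate(item["metadata"])]
    candidates.sort(key=lambda item: euclidean_distance(query, item["vector"]))
    return [item["id"] for item in candidates[:k]]

def post_filter_search(query, items, predicate, k):
    """Rank everything, take the top k, then drop items failing the filter."""
    ranked = sorted(items, key=lambda item: euclidean_distance(query, item["vector"]))
    return [item["id"] for item in ranked[:k] if predicate(item["metadata"])]

# Hypothetical items: an id, a toy 2-D vector, and a metadata dictionary.
items = [
    {"id": "a", "vector": [0.0, 0.1], "metadata": {"lang": "en"}},
    {"id": "b", "vector": [0.0, 0.2], "metadata": {"lang": "fr"}},
    {"id": "c", "vector": [0.0, 0.3], "metadata": {"lang": "fr"}},
    {"id": "d", "vector": [0.0, 0.9], "metadata": {"lang": "en"}},
]

def is_english(metadata):
    return metadata["lang"] == "en"

query = [0.0, 0.0]
pre = pre_filter_search(query, items, is_english, k=2)
post = post_filter_search(query, items, is_english, k=2)
print(pre)   # ['a', 'd']
print(post)  # ['a']
```

In this sketch, post-filtering returns fewer than the requested two results because one of the top two neighbors fails the filter, while pre-filtering returns a full result set by ranking only the items that pass.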

One thing often overlooked about vector databases is that they also need to be good databases! Those that do a good job handling content and metadata at the required scale should be at the top of your list. Your assessment needs to include concerns common to all databases, such as access controls, ease of administration, reliability and availability, and operating costs.

Conclusion

Probably the most common use case today for vector databases is complementing Large Language Models (LLMs) as part of an AI-driven workflow. These are powerful tools, for which the industry is only scratching the surface of what’s possible. Be warned: This amazing technology is likely to inspire you with fresh ideas about new applications and possibilities for your search stack and your business.

Learn how Rockset supports vector search here.