Large Language Models (LLMs) like Anthropic’s Claude have unlocked massive context windows (up to 200k tokens in Claude 4) that let them consider entire documents or codebases in a single pass. However, effectively providing relevant context to these models remains a challenge. Traditionally, developers have resorted to complex prompt engineering or retrieval pipelines to feed external information into an LLM’s prompt. Anthropic’s Model Context Protocol (MCP) is a new open standard that simplifies and standardizes this process.

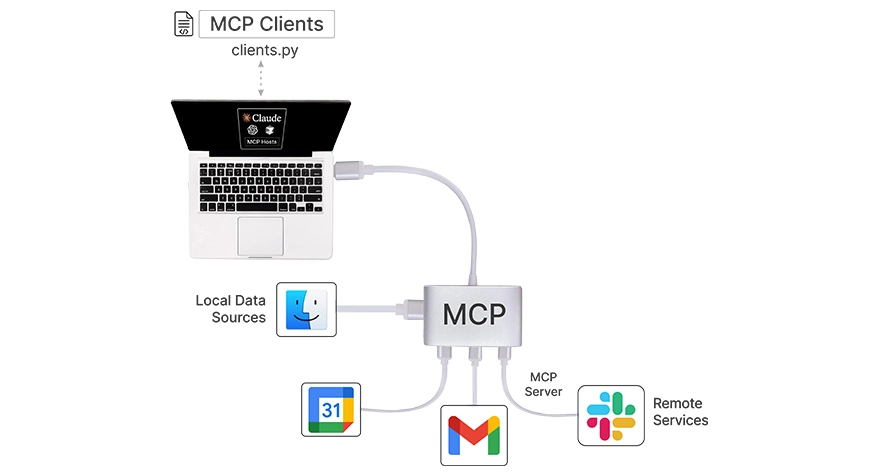

Think of MCP as the “USB-C for AI applications” – a universal connector that lets your LLM seamlessly access external data, tools, and systems. In this article, we’ll explain what MCP is, why it’s important for long-context LLMs, how it compares to traditional prompt engineering, and walk through building a simple MCP-compatible context server in Python. We’ll also discuss practical use cases (like retrieval-augmented generation (RAG) and agent tools) and provide code examples, diagrams, and references to get started with MCP and Claude.

What Is MCP and Why Does It Matter?

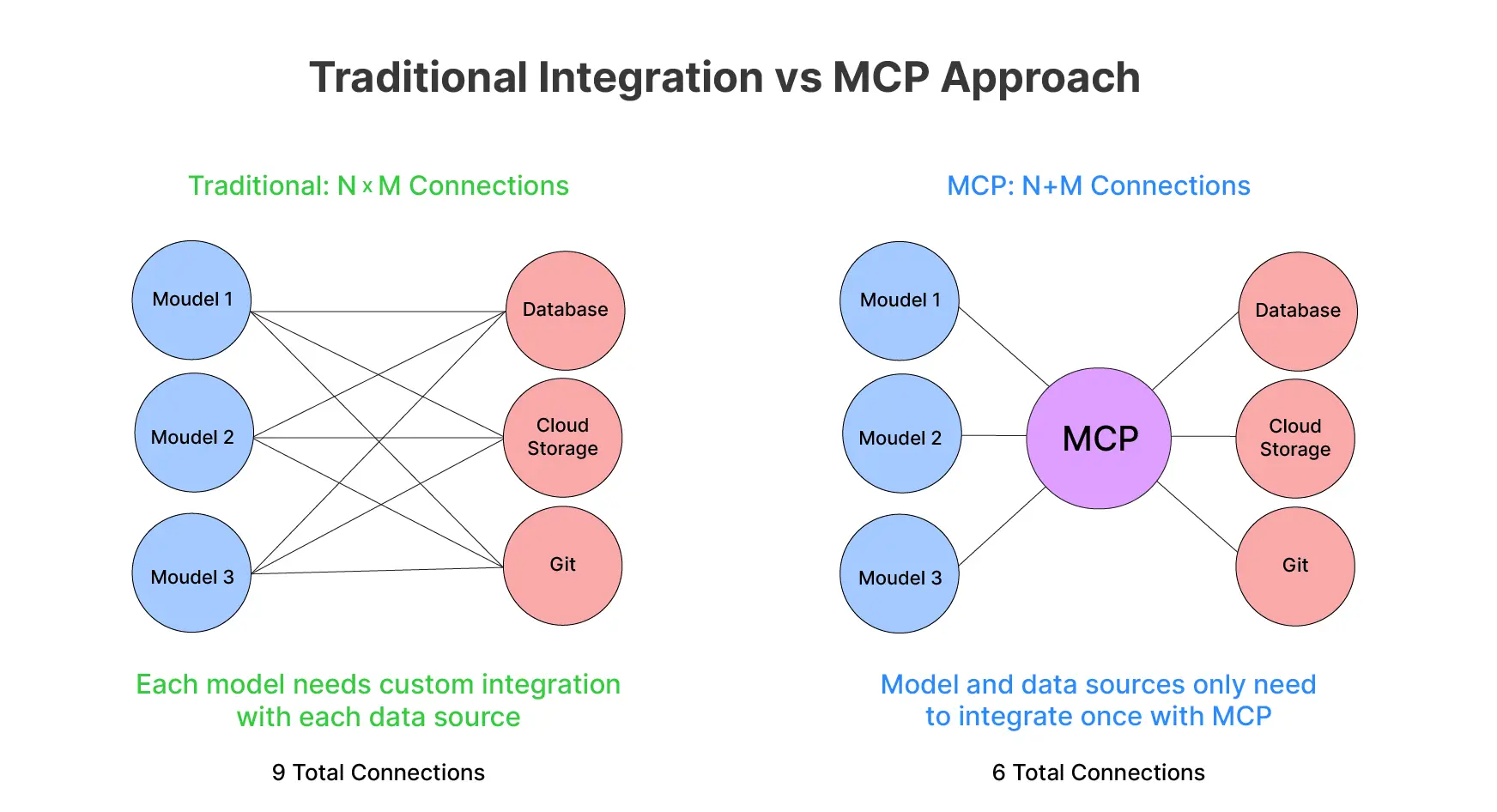

Model Context Protocol is an open protocol that Anthropic released in late 2024. It is meant to standardize how AI applications provide context to LLMs. In essence, MCP defines a common client–server architecture for connecting AI assistants to the places where your data lives: local files, databases, cloud services, and business applications. Before MCP, integrating an LLM with each new data source or API meant writing a custom connector or prompt logic for each specific case. This led to a combinatorial explosion of integrations: M AI applications times N data sources could require M×N bespoke implementations. MCP tackles this by providing a universal interface, so any compliant AI client can talk to any compliant data or service server. This reduces the problem to M + N integration points.
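To make the M×N versus M + N argument concrete, here is a tiny arithmetic sketch. The counts are made-up numbers chosen only for illustration.

```python
# Hypothetical counts, chosen only to illustrate the arithmetic.
num_apps = 4      # M: AI applications (clients)
num_sources = 6   # N: data sources or services (servers)

# Without a shared protocol, every app needs its own connector per source.
bespoke_connectors = num_apps * num_sources

# With a shared protocol, each app implements one client
# and each source implements one server.
protocol_integrations = num_apps + num_sources

print(bespoke_connectors, protocol_integrations)  # 24 10
```

The gap widens as either side grows, which is the main economic argument for a shared protocol.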

Why is MCP especially important for long-context LLMs? Models like Claude 4 can ingest hundreds of pages of text, but deciding what information to put into that huge context window is non-trivial. Simply stuffing all potentially relevant data into the prompt is inefficient and sometimes impossible. Model Context Protocol enables a smarter approach: the LLM or its host application can dynamically retrieve just-in-time context from external sources as needed, instead of front-loading everything. This means you can fill a 200k-token window with relevant data fetched on the fly, for example by pulling in only the sections of a knowledge base that relate to the user’s query. MCP provides a structured, real-time way to maintain and augment the model’s context with external knowledge.

In short, as AI assistants grow in context length, MCP ensures they are not “trapped behind information silos.” Instead, they can access up-to-date facts, files, and tools to ground their responses.

MCP vs. Traditional Prompt Engineering

Before MCP, developers often used RAG pipelines or manual prompt engineering to inject external information into an LLM’s prompt. For example, a RAG system might vector-search a document database for relevant text and then insert those snippets into the prompt as context. Alternatively, one might craft a monolithic prompt containing instructions, examples, and appended data. These approaches work, but they are ad hoc and lack standardization.

Each application ends up reinventing how to fetch and format context for the model, and integrating new data sources means writing new glue code or prompts.

MCP Primitives

Model Context Protocol fundamentally changes this by introducing structured context management. Instead of treating all external data as just more prompt text, MCP breaks interactions down into three standardized components (or “primitives”):

- Resources – think of these as read-only context units (data sources) provided to the model. A resource might be a file’s contents, a database record, or an API response that the model can read. Resources are application-controlled: the host or developer decides what data to expose and how. Importantly, reading a resource has no side effects – it is analogous to a GET request that just fetches data. Resources supply the content that can be injected into the model’s context when needed (e.g., retrieved documents in a Q&A scenario).

- Tools – these are actions or functions the LLM can invoke to perform operations, such as running a computation or calling an external API. Tools are model-controlled, meaning the AI decides if and when to use them (similar to function calling in other frameworks). For example, a tool could be “send_email(recipient, body)” or “query_database(SQL)”. Using a tool may have side effects (sending data, modifying state), and the result of a tool call can be fed back into the conversation.

- Prompts – these are reusable prompt templates or instructions that you can invoke as needed. They are user-controlled or predefined by developers. Prompts might include templates for common tasks or guided workflows (e.g., a template for code review or a Q&A format). Essentially, they provide a way to consistently inject certain instructions or context phrasing without hardcoding it into every prompt.
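The distinction between the three primitives can be sketched as a small registry. This is only an illustration: the `Primitive` class, the `PRIMITIVES` entries, and `safe_to_prefetch` are hypothetical names, not part of any MCP SDK.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Primitive:
    kind: str               # "resource", "tool", or "prompt"
    controlled_by: str      # who decides when it is used
    has_side_effects: bool  # resources and prompts only produce text
    handler: Callable[..., str]


# Hypothetical entries, one per primitive type.
PRIMITIVES = {
    "note://{note_id}": Primitive(
        "resource", "application", False,
        lambda note_id: f"Contents of note {note_id}"),
    "send_email": Primitive(
        "tool", "model", True,
        lambda recipient, body: f"Email queued for {recipient}"),
    "code_review": Primitive(
        "prompt", "user", False,
        lambda code: f"Review this code for bugs and style issues:\n{code}"),
}


def safe_to_prefetch(name: str) -> bool:
    """A host could fetch read-only primitives without asking first."""
    return not PRIMITIVES[name].has_side_effects
```

Keeping the side-effect flag explicit is one way a host might decide which calls need user confirmation.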

Different from Traditional Prompt Engineering

This structured approach contrasts with traditional prompt engineering, where all context (instructions, data, tool hints) is lumped into one large prompt. With MCP, context is modular. An AI assistant can discover what resources and tools are available and then flexibly combine them. In effect, MCP turns an unstructured prompt into a two-way conversation between the LLM and your data and tools. The model isn’t blindly handed a block of text. Instead, it can actively request data or actions through a standard protocol.

Moreover, MCP makes integrations consistent and scalable. As the USB analogy suggests, an MCP-compliant server for (say) Google Drive or Slack can plug into any MCP-aware client (Claude, an IDE plugin, etc.). Developers don’t have to write new prompt logic for each app-tool combination. This standardization also facilitates community sharing: you can leverage pre-built MCP connectors instead of reinventing them. Anthropic has open-sourced many MCP servers for common systems, including file systems, GitHub, Slack, and databases, which you can reuse or learn from. In summary, MCP offers a unified and modular way to supply context and capabilities to LLMs.

MCP Architecture and Data Flow

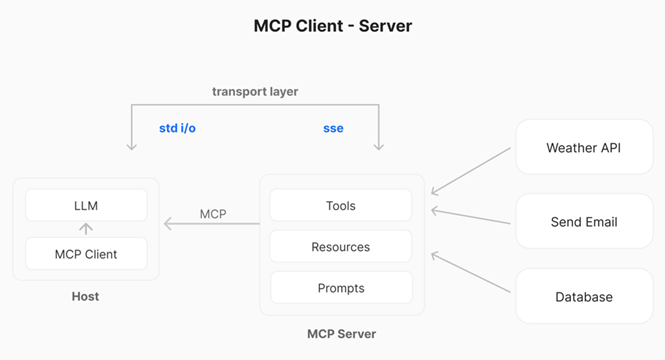

At a high level, Model Context Protocol follows a client–server architecture within an AI application. Let’s break down the key components and how they interact:

Host

The host is the main AI application or interface that the end user interacts with. This can be a chatbot UI (e.g., Claude’s chat app or a custom web app), an IDE extension, or any other “AI assistant” environment. The host contains or invokes the LLM itself. For instance, Claude Desktop is a host: it is an app where Claude (the LLM) converses with the user.

MCP Client

The MCP client is a component (often a library) running within the host application. It manages the connection to one or more MCP servers. You can think of the client as an adapter or middleman. It speaks the MCP protocol, handling messaging, requests, and responses. Each MCP client typically handles one server connection, so if the host connects to multiple data sources, it will instantiate multiple clients. In practice, the client is responsible for discovering server capabilities. It sends the LLM’s requests to the server and relays responses back.

MCP Server

The server is an external (or local) program that wraps a specific data source or functionality behind the MCP standard. The server “exposes” a set of Tools, Resources, and Prompts according to the MCP spec. For example, a server might expose your file system (allowing the LLM to read files as resources), a CRM database, or a third-party API such as weather or Slack. The server handles incoming requests (like “read this resource” or “execute this tool”) and returns results in a format the client and LLM can understand.

These components communicate via a defined transport layer. MCP supports multiple transports. For local servers, a simple STDIO pipe can be used: client and server on the same machine communicate via standard input/output streams. For remote servers, MCP uses HTTP with Server-Sent Events (SSE) to maintain a persistent connection. MCP libraries abstract away the transport details, but it is useful to know that local integrations are possible without any network, and that remote integrations work over web protocols.
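As a rough illustration of what travels over a local pipe, the sketch below frames JSON-RPC 2.0 messages as one JSON object per line, which is how the stdio transport delimits messages. The helper names `write_message` and `read_message` are hypothetical, and an in-memory `StringIO` stands in for the real stdin/stdout pipe; real SDKs handle this for you.

```python
import io
import json
from typing import Optional


def write_message(stream, message: dict) -> None:
    """Serialize one JSON-RPC message as a single line."""
    # json.dumps escapes embedded newlines, so each message stays on one line.
    stream.write(json.dumps(message) + "\n")


def read_message(stream) -> Optional[dict]:
    """Read the next message, or return None when the stream is exhausted."""
    line = stream.readline()
    return json.loads(line) if line else None


# An in-memory buffer stands in for the pipe between client and server.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "resources/read",
                     "params": {"uri": "article://2"}})

pipe.seek(0)
first = read_message(pipe)
second = read_message(pipe)
end_of_stream = read_message(pipe)
```

Line-based framing keeps the local transport simple: neither side needs length prefixes or a network stack.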

Data Flow in MCP

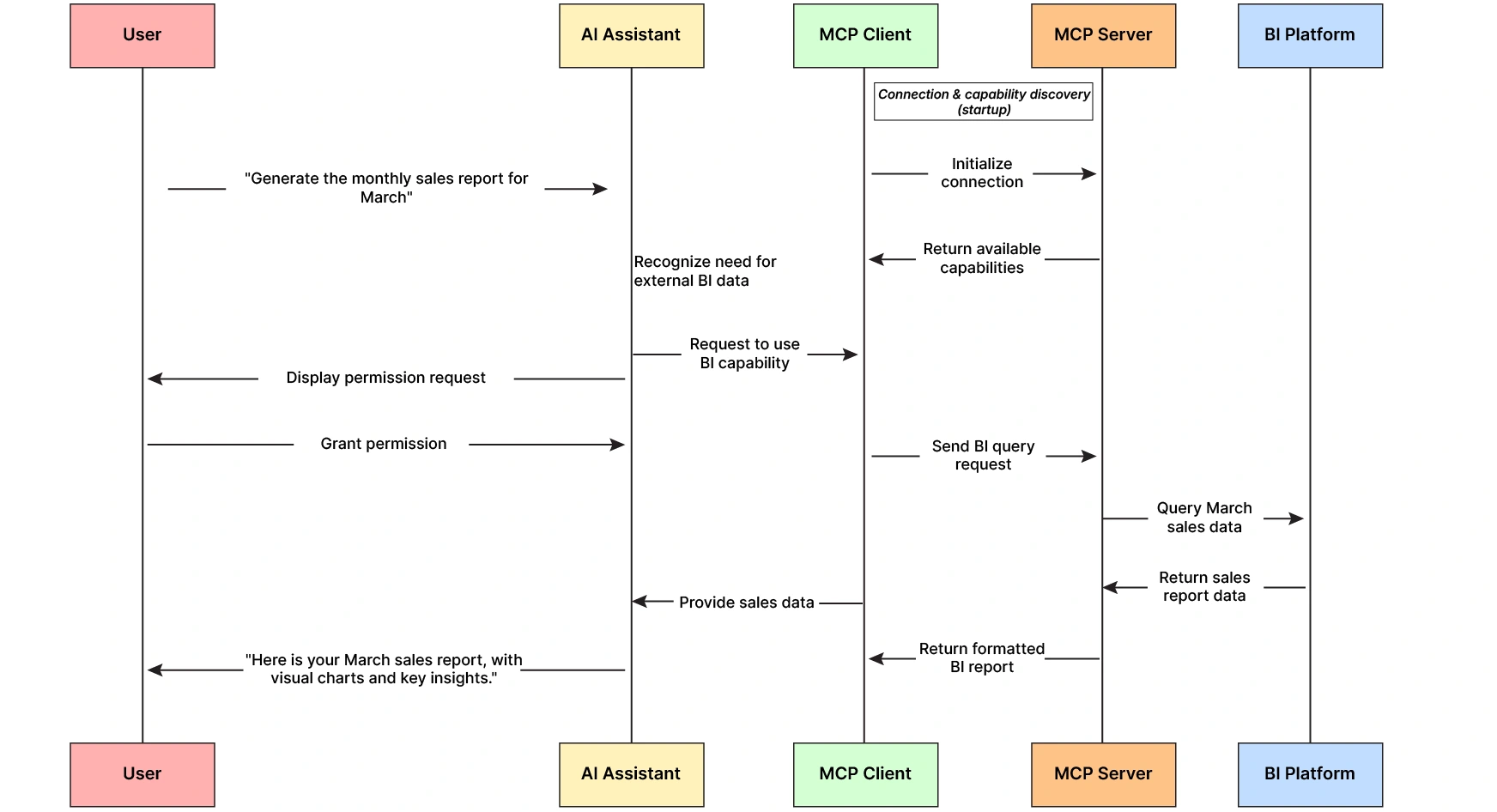

Once everything is set up, the interaction follows a sequence each time the user engages with the AI assistant:

- Initialization & Handshake – When the host application starts or when a new server is added, the MCP client establishes a connection to the server. They perform a handshake to verify protocol versions and exchange basic information. This ensures both sides speak the same MCP version and understand each other’s messages.

- Capability Discovery – After connecting, the client asks the server what it can do. The server responds with a list of available tools, resources, and prompt templates (along with descriptions, parameter schemas, etc.). For example, a server might report: “I have a resource ‘file://{path}’ for reading files, a tool ‘get_weather(lat, lon)’ for fetching weather, and a prompt template ‘summarize(text)’.” The host can use this to present options to the user or inform the LLM about available capabilities.

- Context Provisioning – The host can proactively fetch some resources or choose prompt templates to augment the model’s context at the start of a conversation. For instance, an IDE could use an MCP server to load the user’s current file as a resource and include its content in Claude’s context automatically. Or the host might apply a prompt template (like a specific system instruction) before the LLM starts generating. At this stage, the host essentially injects initial context from MCP resources and prompts into the LLM’s input.

- LLM Invocation & Tool Use – The user’s query, along with any initial context, is given to the LLM. As the LLM processes the query, it can decide to invoke one of the available MCP Tools if needed. For example, if the user asks “What are the open issues in repo X?”, the model might determine it needs to call a get_github_issues(repo) tool provided by a GitHub MCP server. When the model “decides” to use a tool, the host’s MCP client receives that function call request (this is analogous to function calling in other LLM APIs). The client then sends the invocation to the responsible MCP server.

- External Action Execution – The MCP server receives the tool invocation, acts by interfacing with the external system (e.g., calling GitHub’s API), and then returns the result. In our example, it might return a list of issue titles.

- Response Integration – The MCP client receives the result and passes it back to the host and LLM. Typically, the result is incorporated into the LLM’s context as if the model had “seen” it. Continuing the example, the list of issue titles is added to the conversation (often as a system or assistant message containing the tool’s output). The LLM now has the data it fetched and can use it to formulate a final answer.

- Final Answer Generation – With relevant external data in context, the LLM generates its answer to the user. From the user’s perspective, the assistant simply answered using real-time information or actions, but thanks to MCP, the process was standardized and secure.
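The sequence above can be walked through with a toy, in-process stand-in for a server. Everything here is an assumption made for illustration: `ToyServer`, the `"demo-1"` version string, and the canned issue titles are hypothetical, and the model's tool choice is hard-coded rather than produced by an LLM. Only the method names (`initialize`, `tools/list`, `tools/call`) mirror the protocol's request names.

```python
class ToyServer:
    """In-process stand-in for an MCP server (not the real SDK)."""

    PROTOCOL_VERSION = "demo-1"  # hypothetical version string

    def __init__(self):
        self.tools = {"get_github_issues": self._get_github_issues}

    def _get_github_issues(self, repo: str) -> list:
        # Canned data in place of a real GitHub API call.
        return [f"{repo}: Fix login bug", f"{repo}: Update docs"]

    def handle(self, method: str, params: dict):
        if method == "initialize":
            if params["protocolVersion"] != self.PROTOCOL_VERSION:
                raise ValueError("protocol version mismatch")
            return {"protocolVersion": self.PROTOCOL_VERSION}
        if method == "tools/list":
            return sorted(self.tools)
        if method == "tools/call":
            return self.tools[params["name"]](**params["arguments"])
        raise ValueError(f"unknown method: {method}")


server = ToyServer()
conversation = []

# Handshake, then capability discovery.
server.handle("initialize", {"protocolVersion": "demo-1"})
available = server.handle("tools/list", {})

# The user asks a question; a real LLM would choose the tool call itself.
conversation.append(("user", "What are the open issues in repo X?"))
tool_call = {"name": "get_github_issues", "arguments": {"repo": "X"}}

# The server executes the tool and the result joins the conversation.
if tool_call["name"] in available:
    result = server.handle("tools/call", tool_call)
    conversation.append(("tool", "\n".join(result)))
```

After this runs, the last conversation entry holds the issue titles, which is the context the model would use to write its final answer.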

Crucially, Model Context Protocol is designed around security and user control throughout this flow. No tool or resource is meant to be used without explicit permission. For instance, Claude’s implementation of MCP in Claude Desktop requires the user to approve each server and can prompt before certain sensitive operations. Most MCP servers run locally or within the user’s infrastructure by default, keeping data private unless you explicitly allow a remote connection. All of this ensures that giving an LLM access to, say, your file system or database via MCP doesn’t turn into a free-for-all; you maintain control over what it can see or do.
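One way a host could enforce this kind of control is a small authorization gate in front of every tool call. The sketch below is a hypothetical policy, not how Claude Desktop is implemented: `APPROVED_SERVERS`, `SENSITIVE_TOOLS`, and `authorize` are illustrative names, and the confirmation callback stands in for a UI prompt.

```python
# Hypothetical host-side policy; names are illustrative only.
APPROVED_SERVERS = {"DemoServer"}   # servers the user has enabled
SENSITIVE_TOOLS = {"delete_file"}   # tools that need per-call confirmation


def authorize(server: str, tool: str, user_confirms) -> bool:
    """Return True only if the call is allowed under the policy."""
    if server not in APPROVED_SERVERS:
        return False                # server was never approved
    if tool in SENSITIVE_TOOLS:
        # user_confirms stands in for a confirmation dialog.
        return bool(user_confirms(server, tool))
    return True
```

For example, `authorize("DemoServer", "add", lambda s, t: False)` is allowed because `add` is not marked sensitive, while any call to an unapproved server is rejected regardless of what the user callback returns.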

Building a Simple MCP Context Server in Python (Step-by-Step)

One of the great things about Model Context Protocol being an open standard is that you can implement servers in many languages. Anthropic and the community provide SDKs in Python, TypeScript, Java, Kotlin, C#, and more. Here, we’ll focus on Python and build a simple MCP-compatible server to illustrate how to define and use context units (resources) and tools. We assume you have Python 3.10 or newer available, which is what recent releases of the Python SDKs require.

Note: This tutorial uses in-memory data structures to simulate real-world behavior. The example requires no external dataset.

Step 1: Setup and Installation

First, you’ll need an MCP library. You can install Anthropic’s official Python SDK (the mcp library) via pip. There is also a high-level helper library called FastMCP that makes building servers easier (it is a popular community SDK). For this guide, let’s use fastmcp for brevity. You can install it with:

    pip install fastmcp

(Alternatively, you could use the official SDK in a similar way. The concepts remain the same.)

Step 2: Define an MCP Server and Context Units

An MCP server is essentially a program that declares some tools and resources and waits for client requests. Let’s create a simple server that provides two capabilities to illustrate MCP’s context-building:

- A Resource that provides the content of an “article” by ID – simulating a knowledge base lookup. This acts as a context unit (some text data) the model can retrieve.

- A Tool that adds two numbers – a trivial example of a function the model can call (just to show tool usage).

    from fastmcp import FastMCP

    # Initialize the MCP server with a name
    mcp = FastMCP("DemoServer")

    # Example data source for our resource
    ARTICLES = {
        "1": "Anthropic's Claude is an AI assistant with a 100K token context window and advanced reasoning abilities.",
        "2": "MCP (Model Context Protocol) is a standard to connect AI models with external tools and data in a unified way.",
    }


    # Define a Resource (context unit) that provides an article's text by ID
    @mcp.resource("article://{article_id}")
    def get_article(article_id: str) -> str:
        """Retrieve the content of an article by ID."""
        return ARTICLES.get(article_id, "Article not found.")


    # Define a Tool (function) that the model can call
    @mcp.tool()
    def add(a: int, b: int) -> int:
        """Add two numbers and return the result."""
        return a + b


    # (Optional) Define a Prompt template for demonstration
    @mcp.prompt()
    def how_to_use() -> str:
        """A prompt template that instructs the assistant on using this server."""
        return "You have access to a DemoServer with an 'article' resource and an 'add' tool."


    if __name__ == "__main__":
        # Run the server using standard I/O transport (suitable for local client connection)
        mcp.run(transport="stdio")

Let’s break down what’s happening here:

- We create a FastMCP server instance with the name “DemoServer”. Clients use the name to refer to this server.

- We define a dictionary ARTICLES to simulate a small knowledge base. In real scenarios, database queries or API calls would replace this, but for now it is just in-memory data.

- The @mcp.resource("article://{article_id}") decorator exposes the get_article function as a Resource. The string "article://{article_id}" is a URI template indicating how this resource is accessed. MCP clients will see that this server offers a resource with the scheme article://… and can request, for example, article://1. When called, get_article returns a string (the article text). This text is the context unit that would be delivered to the LLM. Notice there are no side effects – it is a read-only retrieval of data.

- The @mcp.tool() decorator exposes add as a Tool. It takes two integers and returns their sum. It is a trivial example just to illustrate a tool; a real tool might call an external API or modify something. The important part is that tools are invoked at the model’s discretion and can have side effects.

- We also showed @mcp.prompt() for completeness. This defines a Prompt template that can provide preset instructions. In this case, how_to_use returns a fixed instruction string. Prompt units can help guide the model (for instance, with usage examples or formatting), but they are optional. The user might select them before the model runs.

- Finally, mcp.run(transport="stdio") starts the server and waits for a client connection, communicating over standard I/O. If we wanted to run this as a standalone HTTP server, we could use a different transport (like HTTP with SSE), but stdio is ideal for a local context server that, say, Claude Desktop can launch on your machine.
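To see how a URI template like article://{article_id} could be turned into function arguments, here is a minimal standard-library sketch. It is not FastMCP's actual matching code; `compile_template` and `match_uri` are hypothetical helpers, and the sketch assumes placeholders never span a `/`.

```python
import re


def compile_template(template: str) -> "re.Pattern":
    """Turn 'article://{article_id}' into a regex with named groups."""
    # re.split with a capture group alternates literal text and placeholder names.
    parts = re.split(r"\{(\w+)\}", template)
    pattern = ""
    for index, part in enumerate(parts):
        if index % 2:                       # odd positions are placeholder names
            pattern += f"(?P<{part}>[^/]+)"
        else:                               # even positions are literal text
            pattern += re.escape(part)
    return re.compile(pattern)


def match_uri(template: str, uri: str):
    """Return the extracted parameters, or None if the URI does not match."""
    match = compile_template(template).fullmatch(uri)
    return match.groupdict() if match else None


params = match_uri("article://{article_id}", "article://2")
no_match = match_uri("article://{article_id}", "file://notes.txt")
```

The extracted dictionary (here containing only `article_id`) is what a server would pass to the decorated function as keyword arguments.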

Step 3: Running the Server and Connecting a Client

To test our Model Context Protocol server, we need an MCP client (for example, Claude). One simple way is to use Claude’s desktop application, which supports local MCP servers out of the box. In Claude’s settings, you can add a configuration pointing to our server script (the code above, saved as demo_server.py). It would look something like this in Claude’s config file (shown for illustration):

    {
      "mcpServers": {
        "DemoServer": {
          "command": "python",
          "args": ["/path/to/demo_server.py"]
        }
      }
    }

This tells Claude Desktop to launch our Python server when it starts (using the given command and script path). Once running, Claude will perform the handshake and discovery. Our server will advertise that it has an article://{article_id} resource, an add tool, and a prompt template.

If you’re using the Anthropic API instead of Claude’s UI, Anthropic provides an MCP connector in its API. There you can specify an MCP server to use during a conversation. Essentially, you configure the API request to include the server (or its capabilities), which lets Claude know it can call those tools or fetch those resources.

Step 4: Using the Context Units and Tools

Now, with the server connected, how does it get used in a conversation? Let’s walk through two scenarios:

Using the Resource (Retrieval)

Suppose the user asks Claude, “What’s Anthropic’s MCP in simple terms?” Because we have an article resource that might contain the answer, Claude (or the host application logic) can fetch that context. One approach is that the host proactively reads article://2 (since article 2 in our data is about MCP) and provides its content to Claude as context. Alternatively, if Claude is set up to reason about available resources, it might ask for article://2 itself after analyzing the question.

In either case, the DemoServer receives a read request for article://2 and returns: “MCP (Model Context Protocol) is a standard to connect AI models with external tools and data in a unified way.” Claude then sees this text as additional context and can use it to formulate a concise answer for the user. Essentially, the article resource served as a context unit: a piece of data injected into the prompt at runtime rather than being part of Claude’s fixed training data or a manually crafted prompt.
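The retrieval scenario can be sketched without any SDK. In this minimal example, `read_resource` and `build_prompt` are hypothetical helpers, and the `<context>` wrapper is just one possible way a host might label injected text; the actual format is up to the host application.

```python
ARTICLES = {
    "2": "MCP (Model Context Protocol) is a standard to connect AI models "
         "with external tools and data in a unified way.",
}


def read_resource(uri: str) -> str:
    """Stand-in for a resource read request routed to the demo server."""
    prefix = "article://"
    if not uri.startswith(prefix):
        raise ValueError(f"unsupported resource: {uri}")
    return ARTICLES.get(uri[len(prefix):], "Article not found.")


def build_prompt(question: str, uris: list) -> str:
    """Inject fetched resources ahead of the user's question."""
    blocks = [
        f'<context source="{uri}">\n{read_resource(uri)}\n</context>'
        for uri in uris
    ]
    return "\n\n".join(blocks + [f"Question: {question}"])


prompt = build_prompt("What is Anthropic's MCP in simple terms?", ["article://2"])
```

Labeling each block with its source URI makes it easier for the model to cite where an answer came from.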

Using the Tool (Function Call)

Now, imagine the user asks: “What’s 2 + 5? Also, explain MCP.” Claude could certainly compute 2 + 5 on its own, but since we gave it an add tool, it might decide to use it. During generation, the model issues a function call: add(2, 5). The MCP client intercepts this and routes it to our server. The add function executes (returning 7), and the result is sent back. Claude then gets the result (perhaps as something like “Tool returned: 7” in the context) and can proceed to answer the question.

This is a trivial math example, but it demonstrates how the LLM can leverage external tools through MCP. In more realistic scenarios, tools could be things like search_documents(query) or send_email(to, content), i.e., agent-like capabilities. MCP allows these to be cleanly integrated and safely sandboxed (the tool runs in our server code, not inside the model, so we have full control over what it can do).
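The routing step can be illustrated with a small dispatcher that only runs tools the server has registered. This is a simplified sketch: `TOOLS`, `route_tool_call`, and the shape of the returned dictionary are assumptions for illustration rather than the SDK's real types.

```python
def add(a: int, b: int) -> int:
    """Add two numbers and return the result."""
    return a + b


# Only functions listed here can ever be executed on the model's behalf.
TOOLS = {"add": add}


def route_tool_call(name: str, arguments: dict) -> dict:
    """Run a registered tool and wrap the outcome for the client."""
    if name not in TOOLS:
        return {"isError": True, "text": f"Unknown tool: {name}"}
    try:
        result = TOOLS[name](**arguments)
    except TypeError as exc:  # e.g. missing or unexpected arguments
        return {"isError": True, "text": str(exc)}
    return {"isError": False, "text": str(result)}


outcome = route_tool_call("add", {"a": 2, "b": 5})
```

Because the model can only name a tool and supply arguments, the registry acts as an allow-list: anything not in `TOOLS` is rejected before any code runs.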

Step 5: Testing and Iterating

When developing your own MCP server, it is important to test that the LLM can use it as expected. Anthropic provides an MCP Inspector tool for debugging servers, and you can always use logs to see the request/response flow. For example, running our demo_server.py directly will likely just wait for input (since it expects an MCP client). Instead, you could write a small script using the MCP library’s client functionality to simulate a client request. If you have Claude Desktop, there is an even simpler test: connect the server, then ask something in Claude’s chat that triggers your resource or tool. Check Claude’s conversation or the logs to verify that it fetched the data.

Tip: When Claude Desktop connects to your server, you can click on the “Tools” or “Resources” panel to see whether your get_article and add functionality is listed. If not, double-check your configuration and that the server started correctly. For troubleshooting, Anthropic’s docs suggest enabling verbose logs in Claude. You can even use Chrome DevTools in the desktop app to inspect the MCP messages. This level of detail can help ensure your context server works smoothly.
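Before involving any client, the server's underlying logic can be unit-tested as plain Python. The sketch below assumes the lookup and addition logic is kept in ordinary functions; depending on the SDK version, a decorated function may be wrapped in another object, so testing undecorated helpers is the safer pattern. The test class name and sample data are hypothetical.

```python
import unittest

ARTICLES = {"1": "First article."}


def get_article(article_id: str) -> str:
    """Plain lookup logic, kept separate from any MCP decorator."""
    return ARTICLES.get(article_id, "Article not found.")


def add(a: int, b: int) -> int:
    return a + b


class DemoServerLogicTest(unittest.TestCase):
    def test_known_article(self):
        self.assertEqual(get_article("1"), "First article.")

    def test_missing_article(self):
        self.assertEqual(get_article("404"), "Article not found.")

    def test_add(self):
        self.assertEqual(add(2, 5), 7)


# Run the suite programmatically so the result can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(DemoServerLogicTest)
outcome = unittest.TextTestRunner(verbosity=0).run(suite)
```

Tests like these catch logic errors early, leaving the Inspector and Claude Desktop for checking the protocol wiring itself.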

Practical Use Cases of MCP

Now that we’ve seen how Model Context Protocol works in principle, let’s discuss some practical applications relevant to developers:

Retrieval-Augmented Generation (RAG) with MCP

One of the most obvious use cases for MCP is improving LLM responses with external knowledge, i.e., RAG. Instead of using a separate retrieval pipeline and manually stuffing the result into the prompt, you can create an MCP server that interfaces with your knowledge repository. For example, you could build a “Docs Server” that connects to your company’s Confluence or a vector database of documents. This server might expose a search tool (e.g., search_docs(query) -> list[doc_id]) and a resource (e.g., doc://{doc_id} to get the content).

When a user asks something, Claude can call search_docs via MCP to find relevant documents (perhaps using embeddings under the hood), then read the doc://… resource to retrieve the full text of those top documents. These texts get fed into Claude’s context, and Claude can answer with direct quotes or up-to-date information from the docs. All of this happens through the standardized protocol. This means that if you later switch to a different LLM that supports MCP, or use a different client interface, your docs server still works the same.
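A toy version of such a docs server's logic might look like the following. The documents, the keyword-overlap scoring, and the function names are all assumptions made for illustration; a real deployment would more likely use embeddings or a search index.

```python
# Hypothetical in-memory knowledge base.
DOCS = {
    "d1": "Refund policy: refunds are issued within 14 days of purchase.",
    "d2": "Shipping policy: orders ship within 2 business days.",
    "d3": "Password reset: use the account settings page to reset a password.",
}


def search_docs(query: str, top_k: int = 2) -> list:
    """Rank documents by how many query words they contain."""
    terms = set(query.lower().split())
    scored = []
    for doc_id, text in DOCS.items():
        words = set(text.lower().replace(":", " ").replace(".", " ").split())
        score = len(terms & words)
        if score:
            scored.append((score, doc_id))
    # Highest score first; ties broken by document ID for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:top_k]]


def read_doc(uri: str) -> str:
    """Resolve a doc://{doc_id} URI to the document text."""
    return DOCS[uri[len("doc://"):]]


hits = search_docs("refund policy")
context = [read_doc(f"doc://{doc_id}") for doc_id in hits]
```

Splitting search (a tool) from reading (a resource) lets the model inspect a short list of IDs first and pull full text only for the documents it needs.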

In fact, many early adopters have done exactly this: hooking up knowledge bases and data stores. Anthropic’s launch announcement mentioned organizations like Block and startups like Sourcegraph and Replit working with MCP to let AI agents retrieve code context, documentation, and more from their existing systems. The benefit is clear: enhanced context awareness for the model leads to more accurate and relevant answers. Instead of an assistant that only knows up to its training cut-off (and hallucinates recent facts), you get an assistant that can, for example, pull the latest product specs from your database or the user’s personal data (with permission) to give a tailored answer. In short, MCP supercharges long-context models. It helps ensure they have the right context at hand, not just a lot of context.

Agent Actions and Tool Use

Beyond static data retrieval, Model Context Protocol is also built to support agentic behavior, where an LLM can perform actions in the outside world. With MCP Tools, you can give the model the ability to do things like send messages, create GitHub issues, run code, or control IoT devices (the possibilities are constrained only by what tools you expose). The key is that MCP provides a safe, structured framework for this. Each tool has a defined interface and requires user opt-in. This mitigates the risks of letting an AI run arbitrary operations because, as a developer, you explicitly define what is allowed.

Consider a coding assistant integrated into your IDE. Using MCP, it might connect to a Git server and a testing framework. The assistant could have a tool run_tests() and another git_commit(message). When you ask it to implement a feature, it could write code (within the IDE), then decide to call run_tests() via MCP to execute the test suite, get the results, and, if all is good, call git_commit() to commit the changes. MCP connectors (for the test runner and Git) facilitate all these steps. The IDE (host) mediates the process, ensuring you approve it. This isn’t hypothetical: developers are actively working on such agent integrations. For instance, the team behind Zed (a code editor) and authors of other IDE plugins have been working with MCP to allow AI assistants to better understand and navigate coding tasks.
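The test-then-commit workflow can be sketched as follows. Both tools are stand-ins: `run_tests` returns canned results instead of invoking a test runner, `git_commit` only records the message, and `finish_feature` with its `approve` callback is a hypothetical host-side policy.

```python
def run_tests() -> dict:
    """Stand-in for a tool that runs the project's test suite."""
    return {"passed": 12, "failed": 0}  # canned result for illustration


committed = []


def git_commit(message: str) -> str:
    """Stand-in for a tool that commits staged changes."""
    committed.append(message)
    return f"committed: {message}"


def finish_feature(message: str, approve) -> str:
    """Commit only when tests pass and the user approves."""
    results = run_tests()
    if results["failed"]:
        return "tests failed; nothing committed"
    if not approve(f"Commit with message {message!r}?"):
        return "commit declined by user"
    return git_commit(message)


status = finish_feature("Add search endpoint", approve=lambda prompt: True)
```

Putting the approval check immediately before the side-effecting call keeps the user in control even when the model orchestrates the earlier steps on its own.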

Another example: a customer support chatbot could have tools to reset a user’s password or retrieve their order status (via MCP servers connected to internal APIs). The AI could handle a support request end-to-end: looking up the order (reading a resource) and initiating a refund (a tool action), all while logging the actions. MCP’s standardized logging and security model helps here: it could require explicit confirmation before executing something like a refund, and all events go through a unified pipeline for monitoring.

The agent paradigm becomes much more robust with Model Context Protocol because any AI agent framework can leverage the same set of tools. Notably, even OpenAI has announced plans to support MCP, indicating it might become a cross-platform standard for plugin-like functionality. This means an investment in building an MCP server for your tool or service could let multiple AI platforms (Claude, potentially ChatGPT, etc.) use it. The LLM tooling ecosystem thus converges toward common ground, benefiting developers with more reuse and users with more powerful AI assistants.

Multi-Modal and Complex Workflows

Model Context Protocol isn’t limited to text-based data. Resources can be binary or other formats too (they have MIME types). You could serve images or audio files as base64 strings or data streams via a resource, and have the LLM analyze them if it has that capability, or pass them to a different model. For example, an MCP server could expose a user’s image collection: the model might retrieve a photo by filename as a resource, use another tool to hand it off to an image captioning service, and then use that caption in the conversation.
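Here is a minimal sketch of packaging binary data as a resource payload with a MIME type and base64-encoded content. The `photo://` scheme and the `blob_resource` helper are hypothetical, and the dictionary keys are only loosely modeled on the spec's binary resource contents; check the specification for the exact shape.

```python
import base64
import mimetypes


def blob_resource(filename: str, data: bytes) -> dict:
    """Package binary data as a base64 payload tagged with a MIME type."""
    mime_type, _ = mimetypes.guess_type(filename)
    return {
        "uri": f"photo://{filename}",  # hypothetical URI scheme
        "mimeType": mime_type or "application/octet-stream",
        "blob": base64.b64encode(data).decode("ascii"),
    }


# The 8-byte PNG signature stands in for real image data.
photo = blob_resource("beach.png", b"\x89PNG\r\n\x1a\n")
```

The MIME type tells the client how to interpret the decoded bytes, for instance whether to hand them to a vision-capable model or to a separate captioning tool.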

Additionally, MCP has a concept of Prompts (as we briefly added in code), which allows for more complex multi-step workflows. A prompt template could guide the model through using certain tools in a specific sequence. For instance, a “Document Q&A” prompt might instruct the model: “First, search the docs for relevant information using the search_docs tool. Then use the doc:// resource to read the top result. Finally, answer the question citing that information.” This prompt could be one of the templates the server offers, and a user might explicitly invoke it for a task (or the host auto-selects it based on context). While not strictly necessary, prompt units provide another lever to ensure the model uses the available tools and context effectively.
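A prompt template of this kind is ultimately just a function that returns instructions. The sketch below shows one possible rendering; `doc_qa_prompt` and its wording are illustrative, and in a real server the function would be registered as a prompt so that clients can discover it.

```python
def doc_qa_prompt(question: str) -> str:
    """Render a step-by-step instruction block for document Q&A."""
    steps = [
        "Search the docs for relevant information using the search_docs tool.",
        "Use the doc:// resource to read the top result.",
        "Answer the question, citing that document.",
    ]
    numbered = "\n".join(
        f"{number}. {step}" for number, step in enumerate(steps, start=1)
    )
    return f"Follow these steps in order:\n{numbered}\n\nQuestion: {question}"


rendered = doc_qa_prompt("How do refunds work?")
```

Keeping the workflow in a template means the sequencing logic lives on the server and can be updated without touching any client.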

Best Practices, Benefits, and Next Steps

Developing with Model Context Protocol does introduce a bit of an initial learning curve (as any new framework does), but it pays off with significant benefits:

- Standardized Integrations – You write your connector once, and it can work with any MCP-compatible AI. This reduces duplicate effort and makes your context and tools easily shareable. For example, instead of separate code to integrate Slack with each of your AI apps, you can have one Slack MCP server and use it everywhere.

- Enhanced Context and Accuracy – By bringing real-time, structured context into the LLM’s world, you get more accurate and current outputs. Instead of hallucinating an answer that sits in your database, the model can query the database via MCP and get the actual data.

- Modularity and Maintainability – MCP encourages a clear separation of concerns. Your “context logic” lives in MCP servers, which you can develop and test independently, even with unit tests for each tool or resource. Your core application logic stays clean. This modular design makes it easier to update one part without breaking everything. It is analogous to how microservices modularize backend systems.

- Security and Control – Thanks to MCP’s local-first design and explicit permission model, you have tight control over what the AI can access. You can run all servers on-premises, keeping sensitive data in-house. Each tool call can be logged and may even require user confirmation. This is essential for enterprise adoption, where data governance is a concern.

- Future-Proofing – As the AI ecosystem evolves, having an open protocol means you are not locked into one vendor’s proprietary plugin system. Anthropic has open-sourced the MCP spec and provided detailed documentation, and a community is growing around it. It is not hard to imagine MCP (or something very much like it) becoming the de facto way AI agents interface with the world. Getting on board now could put you ahead of the curve.

As next steps, here are some suggestions for getting started with MCP:

- Check Out Official Resources – Read the official MCP specification and documentation to get a deeper understanding of all message types and features (for example, advanced topics like the sampling mechanism, where a server can ask the model to complete text, which we didn’t cover here). The spec is well-written and covers the protocol in depth.

- Explore SDKs and Examples – The MCP GitHub organization has SDKs and a repository of example servers. For instance, you can find reference implementations for common integrations (filesystem, Git, Slack, database connectors, etc.) and community-contributed servers for many other services. These are great for learning by example or even for using out of the box.

- Try Claude with MCP – If you have access to Claude (either the desktop app or via API with Claude 4 or Claude-instant), try enabling an MCP server and see how it enhances your workflow. Anthropic’s QuickStart guide can help you set up your first server. Claude 4 (especially Claude Code and Claude for Work) was designed with these integrations in mind, so it’s a good sandbox to experiment in.

- Build and Share – Consider building a small MCP server for a tool or data source you care about – maybe a Jira connector, a Spotify playlist reader, or a Gmail email summarizer. It doesn’t have to be complex. Even the act of wrapping a simple API into MCP can be enlightening. And since MCP is open, you can share your creation with others. Who knows, your MCP integration might fill a need for many developers out there.
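As a rough illustration of what wrapping a simple API involves, the sketch below dispatches one JSON-RPC 2.0 `tools/call` request to a plain Python function using only the standard library. The tool `get_playlist_titles`, the `TOOLS` registry, and `handle_message` are hypothetical names, and the message shapes follow our reading of the MCP spec. A real server should use the official SDK, which also handles initialization, capability negotiation, and transport.

```python
import json
from typing import Any, Callable, Dict, List


# Hypothetical stand-in for a real API client (for example, a playlist service).
def get_playlist_titles(playlist_id: str) -> List[str]:
    fake_api = {"road-trip": ["Song A", "Song B"]}
    return fake_api.get(playlist_id, [])


# Registry mapping tool names to plain Python functions.
TOOLS: Dict[str, Callable[..., Any]] = {"get_playlist_titles": get_playlist_titles}


def error(request_id: Any, code: int, message: str) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def handle_message(raw: str) -> str:
    """Handle a single JSON-RPC request; only `tools/call` is supported here."""
    request = json.loads(raw)
    request_id = request.get("id")
    params = request.get("params", {})
    if request.get("method") != "tools/call":
        response = error(request_id, -32601, "Method not found")
    elif params.get("name") not in TOOLS:
        response = error(request_id, -32602, f"Unknown tool: {params.get('name')}")
    else:
        result = TOOLS[params["name"]](**params.get("arguments", {}))
        # Tool output is returned as a list of content items (simplified to text).
        response = {"jsonrpc": "2.0", "id": request_id,
                    "result": {"content": [{"type": "text",
                                            "text": json.dumps(result)}]}}
    return json.dumps(response)


if __name__ == "__main__":
    call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
            "params": {"name": "get_playlist_titles",
                       "arguments": {"playlist_id": "road-trip"}}}
    print(handle_message(json.dumps(call)))
```

Swapping the fake dictionary for a real HTTP client is the only part specific to a given service; the surrounding dispatch is what an SDK would normally provide.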

Conclusion

Anthropic’s Model Context Protocol represents a significant step forward in making LLMs context-aware and action-capable in a standardized, developer-friendly way. By separating context provision and tool use into a formal protocol, MCP frees us from brittle prompt hacks and one-off integrations. Instead, we get a plug-and-play ecosystem where AI models can fluidly connect to the same wealth of data and services our regular software can. In the era of ever-longer context windows, Model Context Protocol is the plumbing that delivers the right information to fill those windows effectively.

For developers, this is an exciting space to dive into. We’ve only scratched the surface with a simple demo, but you can imagine the possibilities when you combine multiple MCP servers – your AI assistant could simultaneously pull information from a documentation wiki, interact with your calendar, and control IoT devices, all in one conversation. And because it’s all standardized, you spend less time wrangling prompts and more time building cool features.

We encourage you to experiment with MCP and Claude: try out the example servers, build your own, and integrate them into your AI projects. As an open standard backed by a major AI lab and a growing community, MCP could become a cornerstone of how we build AI applications, much like how USB became ubiquitous for device connectivity. By getting involved early, you can help shape this ecosystem and ensure your applications are on the cutting edge of context-aware AI.

References & Further Reading: For more information, see Anthropic’s official announcement and docs on MCP, the MCP spec and developer guide on the Model Context Protocol website, and community articles that explore MCP in depth (e.g., by Phil Schmid and Humanloop). Happy hacking with MCP, and may your AI apps never run out of context!
