Migrating your data warehouse workloads is one of the most challenging yet essential tasks for any organization. Whether the motivation is business growth and scalability requirements or reducing the high license and hardware costs of your existing legacy systems, migrating is not as simple as transferring files. At Databricks, our Professional Services (PS) team has worked with hundreds of customers and partners on migration projects and has a strong record of successful migrations. This blog post explores best practices and lessons learned that any data professional should consider when scoping, designing, building, and executing a migration.

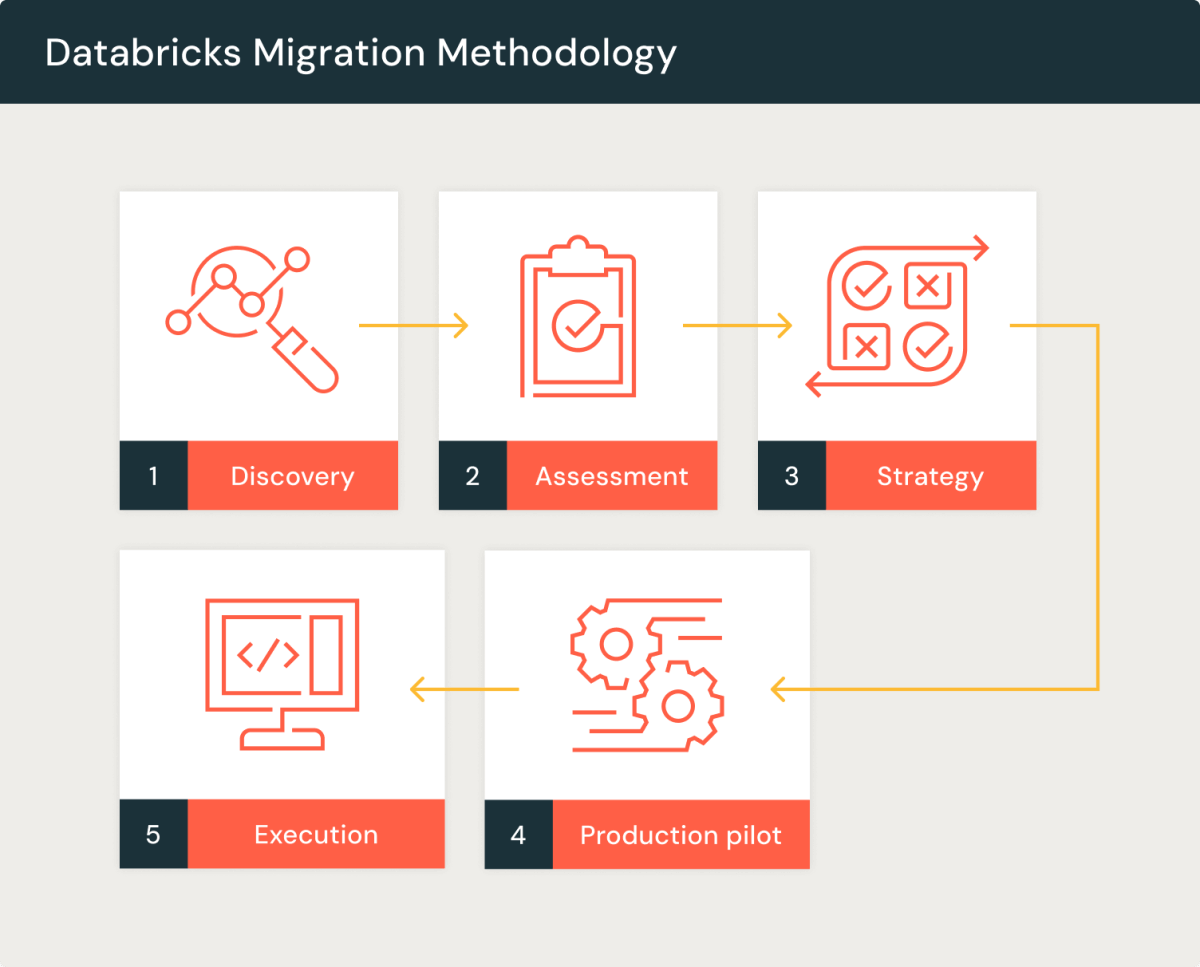

5 phases for a successful migration

At Databricks, we have developed a five-phase process for our migration projects based on our experience and expertise.

Before starting any migration project, we begin with the discovery phase. During this phase, we aim to understand the reasons behind the migration and the challenges of the existing legacy system. We also highlight the benefits of migrating workloads to the Databricks Data Intelligence Platform. The discovery phase involves collaborative Q&A sessions and architectural discussions with key stakeholders from both the customer and Databricks. Additionally, we use an automated discovery profiler to gain insights into the legacy workloads and to estimate the consumption costs of the Databricks Platform, which lets us calculate the TCO reduction.

After completing the discovery phase, we move on to a more in-depth assessment. During this stage, we use automated analyzers to evaluate the complexity of the existing code and obtain a high-level estimate of the effort and cost required. This process provides valuable insights into the architecture of the current data platform and the applications it supports. It also helps us refine the scope of the migration, eliminate outdated tables, pipelines, and jobs, and begin considering the target architecture.

In the migration strategy and design phase, we finalize the details of the target architecture and the detailed design for data migration, ETL, stored procedure code translation, and report and BI modernization. At this stage, we also map the technology between the source and target assets. Once we have finalized the migration strategy, including the target architecture, migration patterns, tooling, and selected delivery partners, Databricks PS, along with the chosen SI partner, prepares a migration Statement of Work (SOW) for the Pilot (Phase I) or for multiple phases of the project. Databricks has several certified Migration Brickbuilder SI partners who provide automated tooling to ensure successful migrations. Additionally, Databricks Professional Services can provide Migration Assurance services alongside an SI partner.

After the Statement of Work (SOW) is signed, Databricks Professional Services (PS) or the chosen Delivery Partner carries out a production pilot phase. In this phase, a clearly defined end-to-end use case is migrated from the legacy platform to Databricks. The data, code, and reports are modernized to Databricks using automated tools and code converter accelerators. Best practices are documented, and a sprint retrospective captures all the lessons learned to identify areas for improvement. A Databricks onboarding guide is created to serve as the blueprint for the remaining phases, which are typically executed in parallel sprints by agile Scrum teams.

Finally, we progress to the full-fledged migration execution phase. We repeat our pilot execution approach, integrating all the lessons learned. This helps establish a Databricks Center of Excellence (CoE) within the organization and scale the teams through collaboration among customer teams, certified SI partners, and our Professional Services team to ensure migration expertise and success.

Lessons learned

Think Big, Start Small

During the strategy phase, it is important to fully understand your business's data landscape. It is equally important to test a few specific end-to-end use cases during the production pilot phase. No matter how well you plan, some issues only come up during implementation, and it is better to face them early so you can find solutions. A great way to choose a pilot use case is to start with the end goal: for example, pick a reporting dashboard that is important for your business, figure out the data and processes needed to create it, and then try building the same dashboard on your target platform as a test. This will give you a good idea of what the migration process will involve.

Automate the discovery phase

We begin by using questionnaires and interviewing the database administrators to understand the scope of the migration. Additionally, our automated platform profilers scan the data dictionaries of databases and Hadoop system metadata to provide us with actual data-driven numbers on CPU utilization, % ETL vs. % BI usage, and usage patterns by various users and service principals. This information is very useful in estimating the Databricks costs and the resulting TCO savings. Code complexity analyzers are also helpful because they provide the number of DDLs, DMLs, stored procedures, and other ETL jobs to be migrated, along with their complexity classification. This helps us determine the migration costs and timelines.
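To make the idea of a complexity classification concrete, here is a minimal sketch of how an inventory of code objects could be bucketed and rolled up into a rough effort figure. It is not the Databricks analyzer: the `CodeObject` fields, the line-count thresholds, and the hours per bucket are all hypothetical values chosen for illustration.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical effort per object, in hours. Real figures would come from the
# assessment itself, not from this table.
HOURS_BY_COMPLEXITY = {"simple": 2, "medium": 8, "complex": 24}


@dataclass(frozen=True)
class CodeObject:
    name: str
    kind: str  # e.g. "DDL", "DML", "stored_procedure", "etl_job"
    line_count: int
    uses_dynamic_sql: bool = False


def classify(obj: CodeObject) -> str:
    """Bucket an object using illustrative thresholds (assumptions only)."""
    if obj.uses_dynamic_sql or obj.line_count > 500:
        return "complex"
    if obj.line_count > 100:
        return "medium"
    return "simple"


def summarize(inventory):
    """Return the object count per bucket and the total estimated hours."""
    counts = Counter(classify(obj) for obj in inventory)
    hours = sum(HOURS_BY_COMPLEXITY[bucket] * n for bucket, n in counts.items())
    return dict(counts), hours


# A made-up inventory standing in for analyzer output.
inventory = [
    CodeObject("create_orders", "DDL", 40),
    CodeObject("load_orders", "stored_procedure", 320),
    CodeObject("rebuild_marts", "stored_procedure", 90, uses_dynamic_sql=True),
    CodeObject("nightly_sales", "etl_job", 750),
]

counts, hours = summarize(inventory)
print(counts, hours)
```

Keeping the thresholds and hour values in one place makes it easy to recalibrate the estimate once the pilot produces real effort numbers.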

Leverage Automated Code Converters

Using automated code conversion tools is essential to expedite migration and minimize costs. These tools help convert legacy code, such as stored procedures or ETL, to Databricks SQL. This ensures that no business rules or functions implemented in the legacy code are overlooked because of missing documentation. Additionally, the conversion process typically saves developers over 80% of development time, enabling them to promptly review the converted code, make necessary adjustments, and focus on unit testing. It is crucial to ensure that the automated tooling can convert not only the database code but also the ETL code from legacy GUI-based platforms.

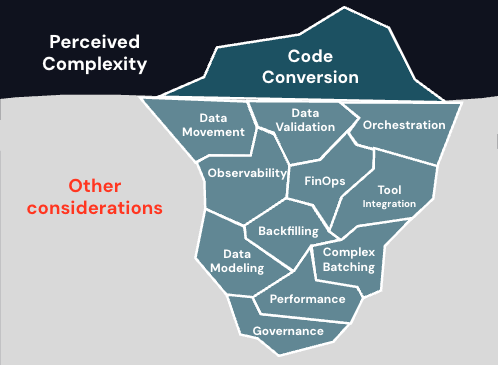

Beyond Code Conversion: Data Matters Too

Migrations often create a misleading impression of a clearly defined project. When we think about migration, we usually focus on converting code from the source engine to the target. However, it is important not to overlook the other details that are necessary to make the new platform usable.

For example, it is crucial to finalize the approach for data migration, just as for code migration and conversion. Data migration can be achieved effectively by using Databricks LakeFlow Connect where applicable or by choosing one of our CDC ingestion partner tools. Initially, during the development phase, it may be necessary to carry out historical and catch-up loads from the legacy EDW while simultaneously building the data ingestion from the actual sources to Databricks. It is also important to have a well-defined orchestration strategy using Databricks Workflows, Delta Live Tables, or similar tools. Finally, your migrated data platform should align with your software development and CI/CD practices before the migration is considered complete.
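The historical-plus-catch-up pattern can be illustrated with a generic watermark-based incremental load. The sketch below is an in-memory illustration only and does not represent LakeFlow Connect or any partner tool. The row layout (`id`, `updated_at`, `status`) and the helper `load_increment` are assumptions made for the example.

```python
from datetime import datetime


def load_increment(source_rows, target, watermark):
    """Upsert rows changed after `watermark` into `target`; return the new watermark.

    `source_rows` is a hypothetical change log from the legacy EDW and
    `target` is a dict keyed by `id` that stands in for the target table.
    """
    batch = sorted(
        (row for row in source_rows if row["updated_at"] > watermark),
        key=lambda row: row["updated_at"],
    )
    for row in batch:
        target[row["id"]] = row  # the latest change for a key wins
    return max((row["updated_at"] for row in batch), default=watermark)


legacy = [
    {"id": 1, "updated_at": datetime(2024, 1, 1), "status": "new"},
    {"id": 2, "updated_at": datetime(2024, 1, 2), "status": "new"},
]
target = {}

# Historical load: everything up to now.
watermark = load_increment(legacy, target, datetime.min)

# The legacy system keeps changing while development continues.
legacy.append({"id": 2, "updated_at": datetime(2024, 1, 5), "status": "shipped"})
legacy.append({"id": 3, "updated_at": datetime(2024, 1, 6), "status": "new"})

# Catch-up load: only rows changed since the last watermark.
watermark = load_increment(legacy, target, watermark)
print(sorted(target), watermark)
```

Persisting the watermark between runs is what allows the catch-up load to be repeated until cutover without reloading the full history.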

Don't ignore governance and security

Governance and security are other components that are often overlooked when designing and scoping a migration. Regardless of your existing governance practices, we recommend using Unity Catalog on Databricks as your single source of truth for centralized access control, auditing, lineage, and data discovery. Migrating to and enabling Unity Catalog increases the effort required for the overall migration. Also, explore the unique capabilities that some of our governance partners provide.

Data Validation and User Testing are essential for a successful migration

Proper data validation and active participation from business Subject Matter Experts (SMEs) during the User Acceptance Testing phase are crucial to the success of the project. The Databricks migration team and our certified System Integrators (SIs) use parallel testing and data reconciliation tools to ensure that the data meets all data quality standards without discrepancies. Strong alignment with executives ensures timely and focused participation of business SMEs during user acceptance testing, facilitating a quick transition to production and agreement on decommissioning older systems and reports once the new system is in place.
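As an illustration of what a reconciliation check does during parallel testing, the sketch below compares a legacy extract with its migrated counterpart by row count and per-row fingerprint. It is a simplified, in-memory example and not the tooling used by Databricks or its partners. The function names and the sample rows are hypothetical.

```python
import hashlib


def row_fingerprint(row: dict) -> str:
    """Hash a row's values in a column-order-independent way."""
    canonical = "|".join(f"{col}={row[col]!r}" for col in sorted(row))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(legacy_rows, target_rows, key):
    """Compare two row sets by primary key and report discrepancies."""
    legacy = {row[key]: row_fingerprint(row) for row in legacy_rows}
    target = {row[key]: row_fingerprint(row) for row in target_rows}
    shared = legacy.keys() & target.keys()
    return {
        "row_count_match": len(legacy_rows) == len(target_rows),
        "missing_in_target": sorted(legacy.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - legacy.keys()),
        "mismatched": sorted(k for k in shared if legacy[k] != target[k]),
    }


# Made-up sample data: one value differs, one row is missing, one is extra.
legacy_rows = [
    {"id": 1, "amount": 100},
    {"id": 2, "amount": 250},
    {"id": 3, "amount": 75},
]
target_rows = [
    {"id": 1, "amount": 100},
    {"id": 2, "amount": 205},
    {"id": 4, "amount": 10},
]

report = reconcile(legacy_rows, target_rows, key="id")
print(report)
```

Note that the row counts match in this sample even though the data does not, which is why a count check alone is not sufficient evidence of a correct migration.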

Make It Real: operationalize and observe your migration

Implement sound operational best practices, such as data quality frameworks, exception handling, reprocessing, and data pipeline observability controls, to capture and report process metrics. This helps identify and report any deviations or delays, allowing for immediate corrective action. Databricks features like Lakehouse Monitoring and our system billing tables aid in observability and FinOps monitoring.
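A small sketch shows how captured process metrics can be turned into deviation flags. This is a generic illustration and does not use Lakehouse Monitoring or the system tables. The `RunMetrics` fields, the issue codes, and the thresholds are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class RunMetrics:
    pipeline: str
    rows_in: int
    rows_out: int
    rows_rejected: int
    duration_s: float


def find_deviations(metrics, max_duration_s, max_reject_ratio):
    """Return a list of issue codes for one pipeline run (empty if healthy)."""
    issues = []
    if metrics.duration_s > max_duration_s:
        issues.append("slow_run")
    reject_ratio = metrics.rows_rejected / metrics.rows_in if metrics.rows_in else 0.0
    if reject_ratio > max_reject_ratio:
        issues.append("high_reject_ratio")
    # Every input row should be either written or rejected.
    if metrics.rows_out + metrics.rows_rejected != metrics.rows_in:
        issues.append("row_count_imbalance")
    return issues


# Hypothetical run: slower than the 30-minute target, 5% rejects, 10 rows lost.
run = RunMetrics("orders_daily", rows_in=1000, rows_out=940,
                 rows_rejected=50, duration_s=1900.0)
issues = find_deviations(run, max_duration_s=1800.0, max_reject_ratio=0.02)
print(run.pipeline, issues)
```

Returning machine-readable issue codes rather than free text makes it straightforward to route each kind of deviation to alerting or to a reprocessing step.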

Trust the experts

Migrations can be challenging. There will always be tradeoffs to balance and unexpected issues and delays to manage. You need proven partners and solutions for the people, process, and technology aspects of the migration. We recommend trusting the experts at Databricks Professional Services and our certified migration partners, who have extensive experience in delivering high-quality migration solutions in a timely manner. Reach out to get your migration assessment started.