Seamless and secure access to data has become one of the biggest challenges facing organizations. Nowhere is this more evident than in technology-led external audits, where analyzing 100% of transactional data is fast becoming the gold standard. These audits involve reviewing tens of billions of lines of financial and operational billing data.

To deliver meaningful insights at scale, analysis must be not only robust but also efficient, balancing cost, time, and quality to achieve the best outcomes in tight timeframes.

Recently, in collaboration with a major UK energy supplier, KPMG used Delta Sharing in Databricks to overcome performance bottlenecks, improve efficiency, and enhance audit quality. This blog discusses our experience, the key benefits, and the measurable impact of Delta Sharing on our audit process.

The Business Challenge

To meet public financial reporting deadlines, we needed to access and analyze tens of billions of lines of the audited entity’s billing data within a short audit window.

Historically, we relied on the audited entity’s analytics environment hosted in AWS PostgreSQL. As data volumes grew, the setup showed its limits:

- Data Volume: Our approach required looking beyond the audit period to analyze historical data that was essential for the routine. As this dataset grew significantly year on year, it eventually exceeded AWS PostgreSQL limits. This forced us to split the data across two separate databases, introducing additional operational overhead and cost.

- Data Transfer: Transferring and copying data from a production environment to a ‘ring-fenced’ analytics PostgreSQL database caused a delayed start and a lack of freshness and agility.

- Query Performance Degradation: While PostgreSQL does support parallelism, it did not make full use of multiple CPU cores when executing our individual queries, leading to suboptimal performance.

- Resourcing: Because access to the entity’s analytics environment was restricted to their assets, we faced challenges in making the best use of our people and quickly onboarding new team members.

Given these constraints, we needed a scalable, high-performance solution that would allow efficient access to and processing of data without compromising security or governance, reducing ‘machine time’ and delivering results more quickly.

Why Delta Sharing?

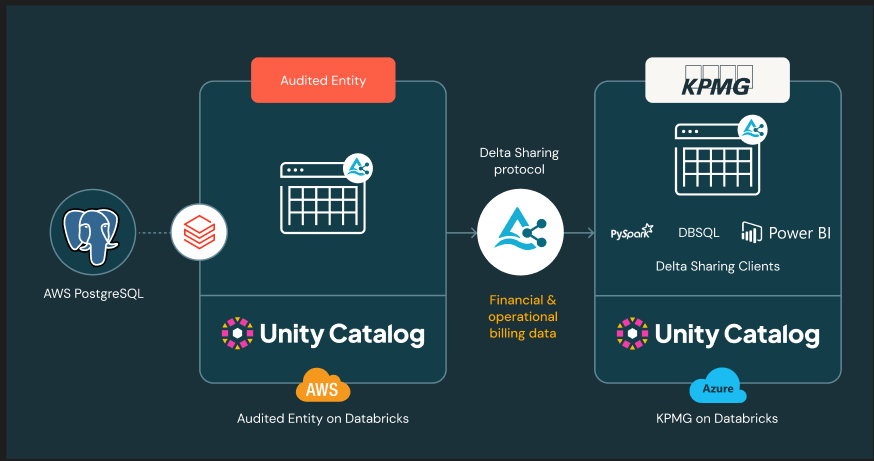

Delta Sharing, an open data-sharing protocol, provided the ideal solution by enabling secure and efficient cross-platform data exchange between KPMG and the audited entity without duplication.

Compared to extending PostgreSQL, Databricks offered several distinct advantages:

- Handles Large Datasets: Delta Sharing is designed to handle petabyte-scale data, eliminating PostgreSQL’s performance limitations.

- Lower Costs: Delta Sharing lowered storage and compute costs by reducing the need for large-scale data replication and transfers.

- Flexibility: Shared data could be accessed in Databricks using PySpark, SQL, and BI tools like Power BI, facilitating seamless integration into our audit deliverables.

- Delta Tables: We could “time travel” to past states of the data. This was valuable for checking historical points that were previously lost in the client’s data model.
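To make the "time travel" point concrete, here is a minimal sketch of how a query against an earlier state of a Delta table might be composed. The `VERSION AS OF` and `TIMESTAMP AS OF` clauses are standard Delta Lake SQL; the table name `audit_share.billing.meter_readings` and the helper `time_travel_query` are hypothetical and do not come from the engagement described here. Whether history is available on a shared table depends on how the provider configured the share, so treat this as an illustration rather than a description of our setup.

```python
from typing import Optional


def time_travel_query(table: str,
                      version: Optional[int] = None,
                      timestamp: Optional[str] = None) -> str:
    """Build a Delta Lake time-travel SELECT for a table (hypothetical helper).

    Exactly one of `version` or `timestamp` must be supplied.
    """
    if (version is None) == (timestamp is None):
        raise ValueError("Provide exactly one of version or timestamp")
    if version is not None:
        return f"SELECT * FROM {table} VERSION AS OF {int(version)}"
    return f"SELECT * FROM {table} TIMESTAMP AS OF '{timestamp}'"


# Hypothetical three-level table name (catalog.schema.table).
query = time_travel_query("audit_share.billing.meter_readings",
                          timestamp="2024-03-31")
print(query)

# In a Databricks notebook, where a `spark` session is predefined,
# the string could then be executed with: spark.sql(query)
```

Keeping the query construction separate from execution makes it easy to log exactly which historical state each audit test was run against.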

Implementation Approach

We introduced Delta Sharing in a way that didn’t disrupt ongoing audit work:

- Data Sharing: We gave the entity a list (in JSON format) of the tables and views we needed. They used Lakeflow Jobs and Delta Sharing to make these available to us directly in our Databricks environment. The audited entity provided access by sharing a key, granting us permission to access these pre-agreed datasets with minimal effort between AWS and Azure. Delta Sharing handled this cross-cloud exchange securely, without copying or moving the data between platforms.

- Integration with Unity Catalog: Unity Catalog gave us a single place to manage permissions, apply governance policies, and maintain full visibility of who accessed what data.

- Scheduled Data Refreshes: During key audit cycles, data was refreshed to align with financial reporting timelines.

- Performance Optimization: Once inside Databricks, we reworked queries from PostgreSQL to Spark SQL and PySpark. With Delta Sharing providing governed, ready-to-use data, we focused on optimizing performance rather than managing data movement.
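As an illustration of the first step above, the sketch below shows what a JSON list of requested tables and views could look like and how it might be sanity-checked before being sent to the data provider. The structure, the field names (`share_name`, `objects`, `schema`, `name`, `type`) and the object names are all assumptions made for this example; the format actually agreed with the entity is not described here.

```python
import json

# Hypothetical request: every name below is illustrative only.
requested_objects = {
    "share_name": "audit_share",
    "objects": [
        {"schema": "billing", "name": "meter_readings", "type": "table"},
        {"schema": "billing", "name": "invoices", "type": "table"},
        {"schema": "billing", "name": "customer_accounts_v", "type": "view"},
    ],
}


def validate_request(request: dict) -> list:
    """Check each entry and return fully qualified 'schema.name' strings."""
    allowed_types = {"table", "view"}
    qualified = []
    for obj in request["objects"]:
        if obj["type"] not in allowed_types:
            raise ValueError(f"Unsupported object type: {obj['type']}")
        qualified.append(f"{obj['schema']}.{obj['name']}")
    if len(set(qualified)) != len(qualified):
        raise ValueError("Duplicate objects in request")
    return qualified


names = validate_request(requested_objects)
manifest_json = json.dumps(requested_objects, indent=2)
print(manifest_json)
```

A machine-readable list like this gives both parties a single agreed definition of scope, which can also be compared against what actually appears in the share.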

Measurable Impact

We used Delta Sharing to access and analyze billions of meter readings across millions of the entity’s customer accounts. We saw significant improvements across several KPIs:

- Faster Queries: Delta Sharing allowed us to use more computing power for big data tasks. Some of our most complex queries finished over 80% faster than in our previous PostgreSQL process, for example going from 14.5 hours to 2.5 hours.

- Improved Audit Quality: By spending less time waiting for machines, we had more time to focus on exceptions, unusual patterns, and complex edge cases. This improved our data analytics outcomes by 15 percentage points in some instances and reduced the burden of any residual sampling.

- Cost Savings: By using Delta Sharing, we avoided making extra copies of the data. This meant we only stored and processed what was needed, which brought down both storage and compute costs.

- Quicker Access: Because the data was provisioned via Delta Sharing, less time was wasted waiting for it to be ready, allowing us to start work sooner.

- Easier Team Onboarding: New team members could be onboarded seamlessly, and the team could draw on a broader mix of coding skills, namely SQL and PySpark.
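The "over 80% faster" figure follows directly from the two runtimes quoted above. The short calculation below works through it; the function name is ours, and the only inputs are the 14.5-hour and 2.5-hour runtimes from the text.

```python
def runtime_reduction(before_hours: float, after_hours: float) -> float:
    """Fraction of the original runtime that was eliminated."""
    return (before_hours - after_hours) / before_hours


before, after = 14.5, 2.5          # hours, as quoted in the text
reduction = runtime_reduction(before, after)
speedup = before / after

print(f"Runtime reduction: {reduction:.1%}")   # Runtime reduction: 82.8%
print(f"Speed-up factor:   {speedup:.1f}x")    # Speed-up factor:   5.8x
```

In other words, the example query ran in roughly a sixth of the time it previously took.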

“Using Delta Sharing has made a noticeable difference to our audit process. We can securely access data across cloud platforms, without delays or manual data movement, so our teams always work from the latest, single source of truth. This cross-cloud capability means faster audits, more reliable results for the audited clients we work with, and tight control over data access at every step.” (Anna Barrell, Audit Partner, KPMG UK)

Technical Considerations

A couple of technical considerations to keep in mind when working with Databricks:

- Delta Sharing: As early adopters, we found that some features weren’t yet available (for example, sharing materialized views). We’re excited that these have now been refined with the GA release, and we’ll be enhancing our Delta Sharing solutions with this functionality.

- Lakeflow Jobs: Currently, there is no mechanism to check whether an upstream job for a Delta Shared table has completed. One script was executed before the upstream job had finished and produced an incomplete output, though this was quickly identified through our completeness and accuracy procedures.
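One way to reduce the risk described in the second point is a recipient-side readiness check that runs before any analysis. The sketch below is an assumption-laden illustration, not the procedure we used: `TableSnapshot`, `is_ready`, the control total, and the dates are all hypothetical. It assumes the provider can supply an expected minimum row count, and that the shared table carries a load date that can be compared with the period end.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class TableSnapshot:
    """Summary statistics read from a shared table (hypothetical shape)."""
    row_count: int
    max_load_date: date


def is_ready(snapshot: TableSnapshot,
             expected_min_rows: int,
             period_end: date) -> bool:
    """Return True only if the table looks fully loaded for the period."""
    has_enough_rows = snapshot.row_count >= expected_min_rows
    covers_period = snapshot.max_load_date >= period_end
    return has_enough_rows and covers_period


# Illustrative values only: a load that stopped part-way through.
partial = TableSnapshot(row_count=4_000_000, max_load_date=date(2024, 3, 20))
complete = TableSnapshot(row_count=10_500_000, max_load_date=date(2024, 3, 31))

for label, snap in [("partial", partial), ("complete", complete)]:
    ready = is_ready(snap, expected_min_rows=10_000_000,
                     period_end=date(2024, 3, 31))
    print(f"{label}: ready={ready}")
```

A check like this does not replace completeness and accuracy procedures, but it can stop a downstream script from starting against a table that is still being refreshed.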

Looking to the Future

Delta Sharing has proven to be a game-changer for audit data analytics, enabling efficient, scalable, and secure collaboration. Our successful implementation with the energy supplier demonstrates the value of Delta Sharing for clients with diverse data sources across clouds and platforms.

We recognize that many organizations store a significant portion of their financial data in SAP. This presents an additional opportunity to apply the same principles of efficiency and quality at an even greater scale.

Through Databricks’ strategic partnership with SAP, announced in February of this year, we can now access SAP data via Delta Sharing. This joint solution, which has become one of SAP’s fastest-selling products in a decade, allows us to tap into this data while preserving its context and syntax. By doing so, we can ensure the data remains fully governed under Unity Catalog and its total cost of ownership is optimized. As the entities we audit progress on their transformation journey, we at KPMG are looking to build on this momentum, anticipating the additional benefits it will bring to a streamlined audit process.