The discourse about how much AI-generated code needs to be reviewed often feels very binary. Is vibe coding (i.e. letting AI generate code without looking at the code) good or bad? The answer is of course neither, because “it depends”.

So what does it depend on?

When I’m using AI for coding, I find myself constantly making little risk assessments about whether to trust the AI, how much to trust it, and how much work I need to put into verifying the results. And the more experience I get with using AI, the more honed and intuitive these assessments become.

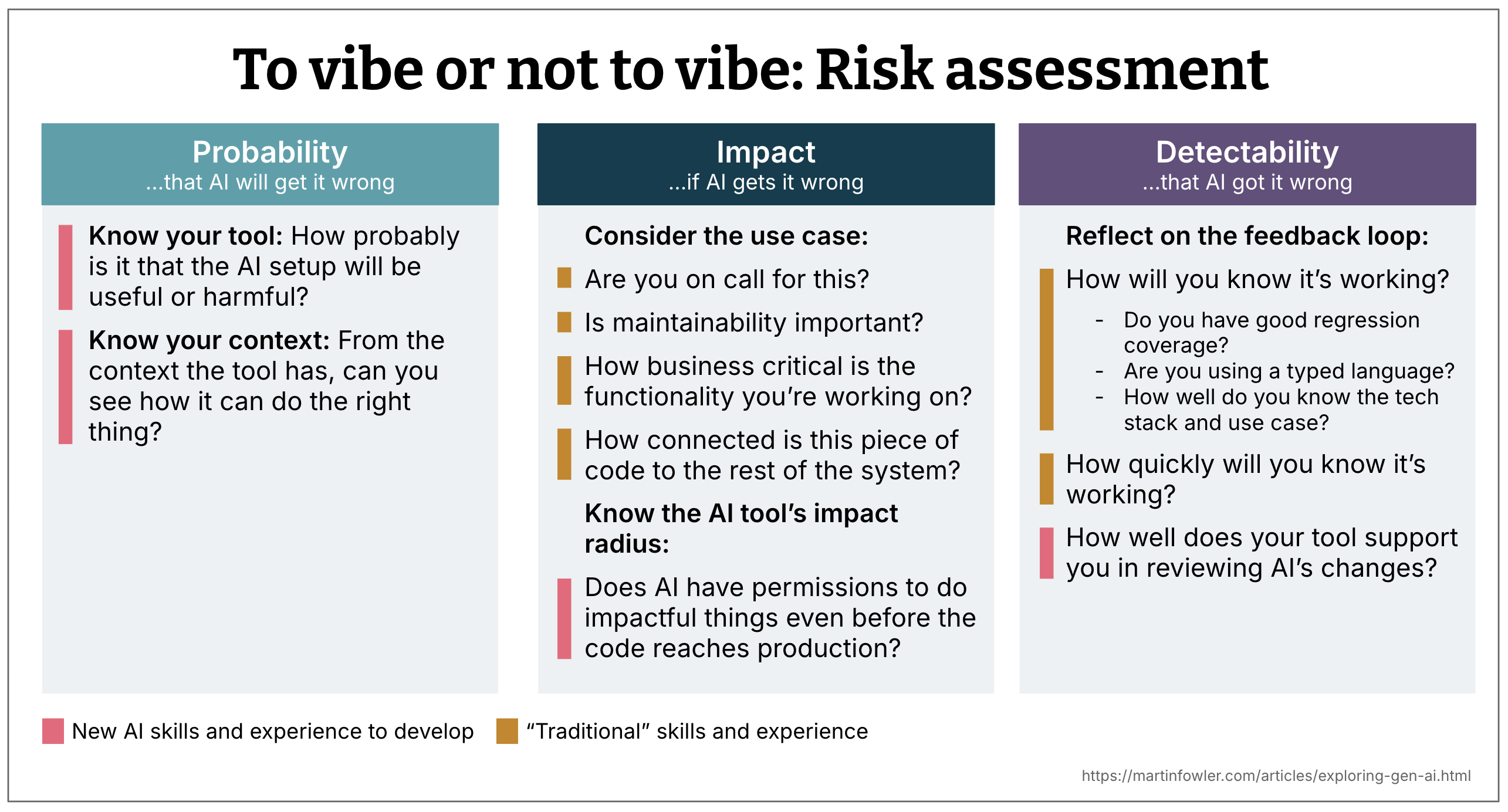

Risk assessment is typically a combination of three factors:

- Likelihood

- Impact

- Detectability

Reflecting on these three dimensions helps me decide whether I should reach for AI or not, whether I should review the code or not, and at what level of detail I do that review. It also helps me think about mitigations I can put in place when I want to take advantage of AI’s speed but reduce the risk of it doing the wrong thing.

1. Likelihood: How likely is AI to get things wrong?

The following are some of the factors that help you determine the likelihood dimension.

Know your tool

The AI coding assistant is a function of the model used, the prompt orchestration happening in the tool, and the level of integration the assistant has with the codebase and the development environment. As developers, we don’t have all the information about what is going on under the hood, especially when we’re using a proprietary tool. So the assessment of the tool’s quality is a combination of knowing its proclaimed features and our own previous experience with it.

Is the use case AI-friendly?

Is the tech stack prevalent in the training data? How complex is the solution you want AI to create? How big is the problem that AI is supposed to solve?

You can also consider more generally whether you’re working on a use case that needs a high level of “correctness” or not. E.g., building a screen exactly based on a design, or drafting a rough prototype screen.

Be aware of the available context

Likelihood isn’t only about the model and the tool, it’s also about the available context. The context is the prompt you provide, plus all the other information the agent has access to via tool calls etc.

- Does the AI assistant have enough access to your codebase to make a good decision? Is it seeing the files, the structure, the domain logic? If not, the chance that it will generate something unhelpful goes up.

- How effective is your tool’s code search strategy? Some tools index the entire codebase, some make on-the-fly grep-like searches over the files, some build a graph with the help of the AST (Abstract Syntax Tree). It can help to know what strategy your tool of choice uses, though ultimately only experience with the tool will tell you how well that strategy really works.

- Is the codebase AI-friendly, i.e. is it structured in a way that makes it easy for AI to work with? Is it modular, with clear boundaries and interfaces? Or is it a big ball of mud that fills up the context window quickly?

- Is the existing codebase setting a good example? Or is it a mess of hacks and anti-patterns? If the latter, the chance of AI generating more of the same goes up if you don’t explicitly tell it what the good examples are.
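To make the difference between search strategies a bit more concrete, here is a minimal sketch that contrasts a grep-like text search with a lookup based on the AST. It does not show how any particular assistant works; the sample source and the helper names `grep_search` and `ast_call_sites` are made up for illustration, and it only looks up call sites rather than building a full graph.

```python
import ast
import re

# Hypothetical snippet standing in for a file in the codebase.
SOURCE = '''
def calculate_discount(order):
    return order.total * 0.1

def checkout(order):
    # calculate_discount is applied before tax
    return order.total - calculate_discount(order)
'''


def grep_search(source: str, term: str) -> list[int]:
    """Grep-like search: line numbers of every textual match, comments included."""
    pattern = re.compile(re.escape(term))
    return [
        number
        for number, line in enumerate(source.splitlines(), start=1)
        if pattern.search(line)
    ]


def ast_call_sites(source: str, func_name: str) -> list[int]:
    """AST-based search: line numbers where func_name is actually called."""
    return [
        node.lineno
        for node in ast.walk(ast.parse(source))
        if isinstance(node, ast.Call)
        and isinstance(node.func, ast.Name)
        and node.func.id == func_name
    ]


print("text matches:", grep_search(SOURCE, "calculate_discount"))
print("call sites:  ", ast_call_sites(SOURCE, "calculate_discount"))
```

The text search also returns the definition and the comment, while the AST lookup returns only the real call. Which of the two is more useful depends on the question being asked, which is one reason experience with the tool matters more than knowing the strategy on paper.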

2. Impact: If AI gets it wrong and you don’t notice, what are the consequences?

This consideration is mainly about the use case. Are you working on a spike or production code? Are you on call for the service you are working on? Is it business critical, or just internal tooling?

Some good sanity checks:

- Would you ship this if you were on call tonight?

- Does this code have a high impact radius, e.g. is it used by a lot of other components or consumers?

3. Detectability: Will you notice when AI gets it wrong?

This is about feedback loops. Do you have good tests? Are you using a typed language? Does your stack make failures obvious? Do you trust the tool’s change tracking and diffs?

It also comes down to your own familiarity with the codebase. If you know the tech stack and the use case well, you’re more likely to spot something fishy.

This dimension leans heavily on traditional engineering skills: test coverage, system knowledge, code review practices. And it influences how confident you can be even when AI makes the change for you.

A combination of traditional and new skills

You might have already noticed that many of these assessment questions require “traditional” engineering skills, while others call for newer skills that are specific to working with AI.

Combining the three: A sliding scale of review effort

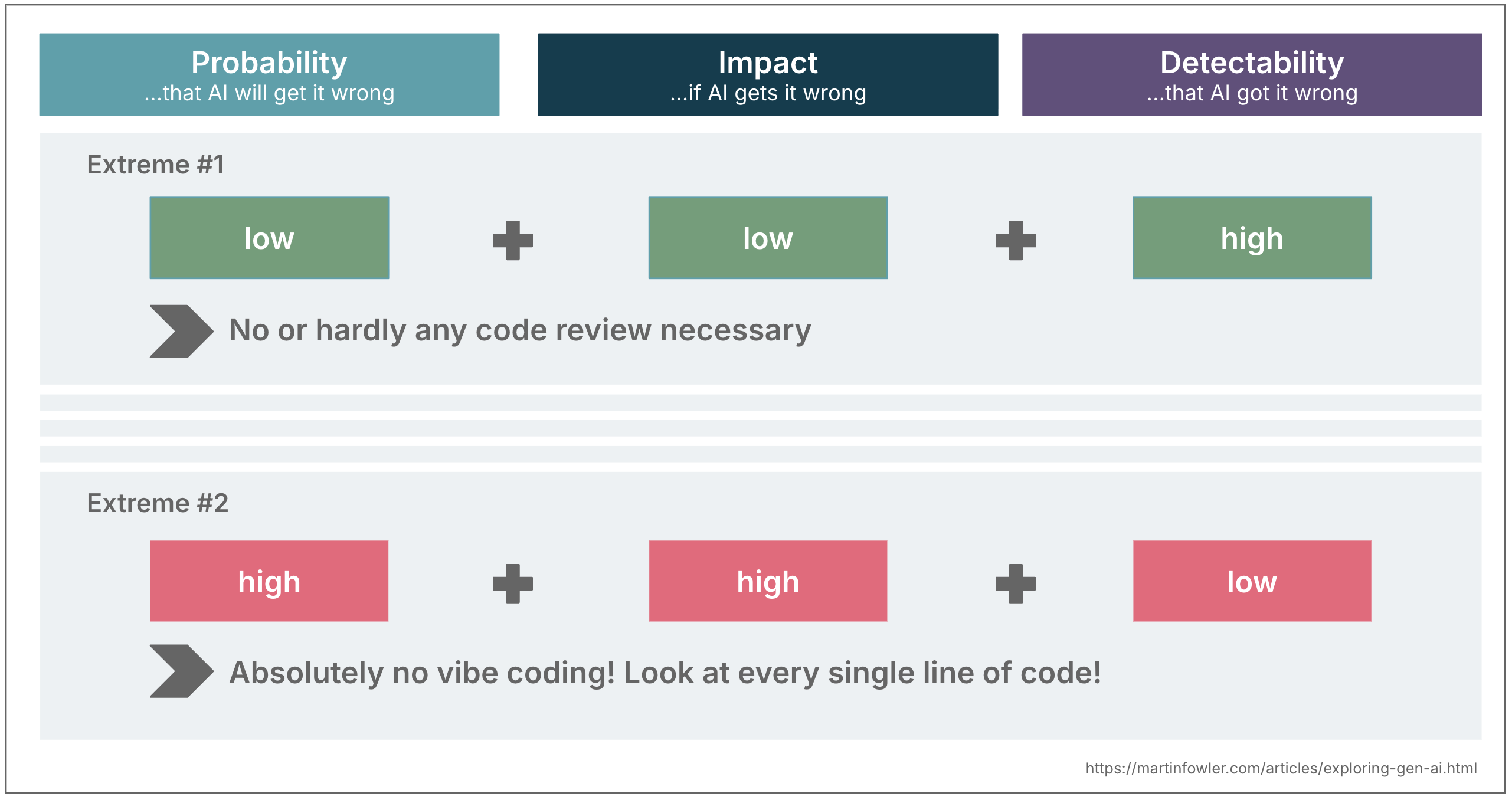

When you combine these three dimensions, they can guide your level of oversight. Let’s take the extremes as an example to illustrate this idea:

- Low likelihood + low impact + high detectability: Vibe coding is fine! As long as things work and I achieve my goal, I don’t review the code at all.

- High likelihood + high impact + low detectability: A high level of review is advisable. Assume the AI might be wrong and cover for it.

Most situations land somewhere in between, of course.
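The sliding scale can be sketched in code, with the caveat that in practice it is an intuitive judgment and not a formula. The `Level` enum, the `review_effort` function, the scoring, and the thresholds below are all assumptions made up for illustration.

```python
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def review_effort(likelihood: Level, impact: Level, detectability: Level) -> str:
    """Map the three dimensions to a rough level of review effort.

    High detectability lowers the risk, so it is inverted before summing.
    The thresholds are arbitrary assumptions, not recommended values.
    """
    # The score ranges from 3 (lowest risk) to 9 (highest risk).
    score = likelihood + impact + (4 - detectability)
    if score <= 4:
        return "vibe"      # just check that it works
    if score <= 6:
        return "skim"      # read the diff, spot check the risky parts
    return "thorough"      # detailed review plus extra verification


# The two extremes from the list above, plus a case in between.
print(review_effort(Level.LOW, Level.LOW, Level.HIGH))
print(review_effort(Level.HIGH, Level.HIGH, Level.LOW))
print(review_effort(Level.MEDIUM, Level.MEDIUM, Level.MEDIUM))
```

Treating the three dimensions as equally weighted is a simplification. In a real situation a single factor, such as a very high impact, might dominate the decision on its own.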

Example: Legacy reverse engineering

We recently worked on a legacy migration for a client where the first step was to create a detailed description of the existing functionality with AI’s help.

- Likelihood of getting wrong descriptions was medium:

  - Tool: The model we had to use often failed to follow instructions well.

  - Available context: We did not have access to all of the code; the backend code was unavailable.

  - Mitigations: We ran prompts multiple times to spot check the variance in results, and we increased our confidence level by analysing the decompiled backend binary.

- Impact of getting wrong descriptions was medium:

  - Business use case: On the one hand, the system was used by thousands of external business partners of this organization, so getting the rebuild wrong posed a business risk to reputation and revenue.

  - Complexity: On the other hand, the complexity of the application was relatively low, so we expected it to be quite easy to fix mistakes.

  - Planned mitigations: A staggered rollout of the new application.

- Detectability of getting the wrong descriptions was medium:

  - Safety net: There was no existing test suite that could be cross-checked.

  - SME availability: We planned to bring in SMEs for review, and to create feature parity comparison tests.
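The variance spot check mentioned under the mitigations could look roughly like the sketch below. It is not the tooling used on that project: the sample outputs are invented, `pairwise_similarity` and `needs_closer_look` are hypothetical helpers, and the plain text similarity from Python's `difflib` is only a crude stand-in for actually reading and comparing the descriptions.

```python
from difflib import SequenceMatcher
from itertools import combinations


def pairwise_similarity(outputs: list[str]) -> list[tuple[int, int, float]]:
    """Return (i, j, ratio) for every pair of outputs; ratio is between 0.0 and 1.0."""
    return [
        (i, j, SequenceMatcher(None, outputs[i], outputs[j]).ratio())
        for i, j in combinations(range(len(outputs)), 2)
    ]


def needs_closer_look(outputs: list[str], threshold: float = 0.8) -> bool:
    """Flag a prompt whose repeated runs disagree noticeably.

    The 0.8 threshold is an arbitrary assumption, not a recommended value.
    """
    return any(ratio < threshold for _, _, ratio in pairwise_similarity(outputs))


# Invented descriptions, standing in for three runs of the same prompt.
runs = [
    "The export screen lets partners download invoices as CSV.",
    "The export screen lets partners download invoices as CSV.",
    "Partners can upload invoices and the screen validates them.",
]

for i, j, ratio in pairwise_similarity(runs):
    print(f"run {i} vs run {j}: {ratio:.2f}")
print("needs closer look:", needs_closer_look(runs))
```

A low similarity does not say which run is right, only that the description is unstable and deserves a human look or a second source such as the decompiled binary.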

Without a structured assessment like this, it would have been easy to under-review or over-review. Instead, we calibrated our approach and planned for mitigations.

Closing thought

This kind of micro risk assessment becomes second nature. The more you use AI, the more you build intuition for these questions. You start to feel which changes can be trusted and which need closer inspection.

The goal is not to slow yourself down with checklists, but to develop intuitive habits that help you navigate the line between leveraging AI’s capabilities and reducing the risk of its downsides.